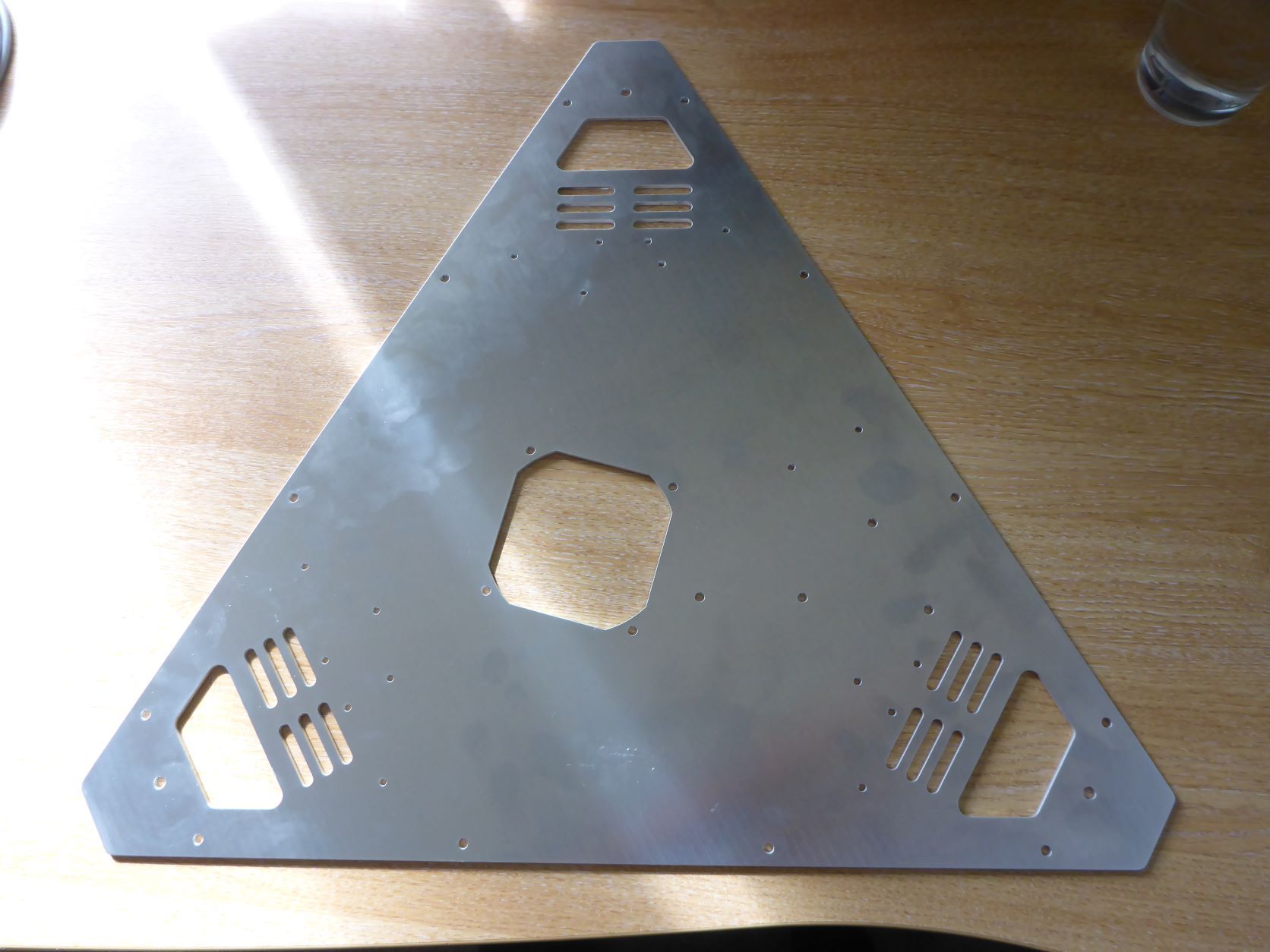

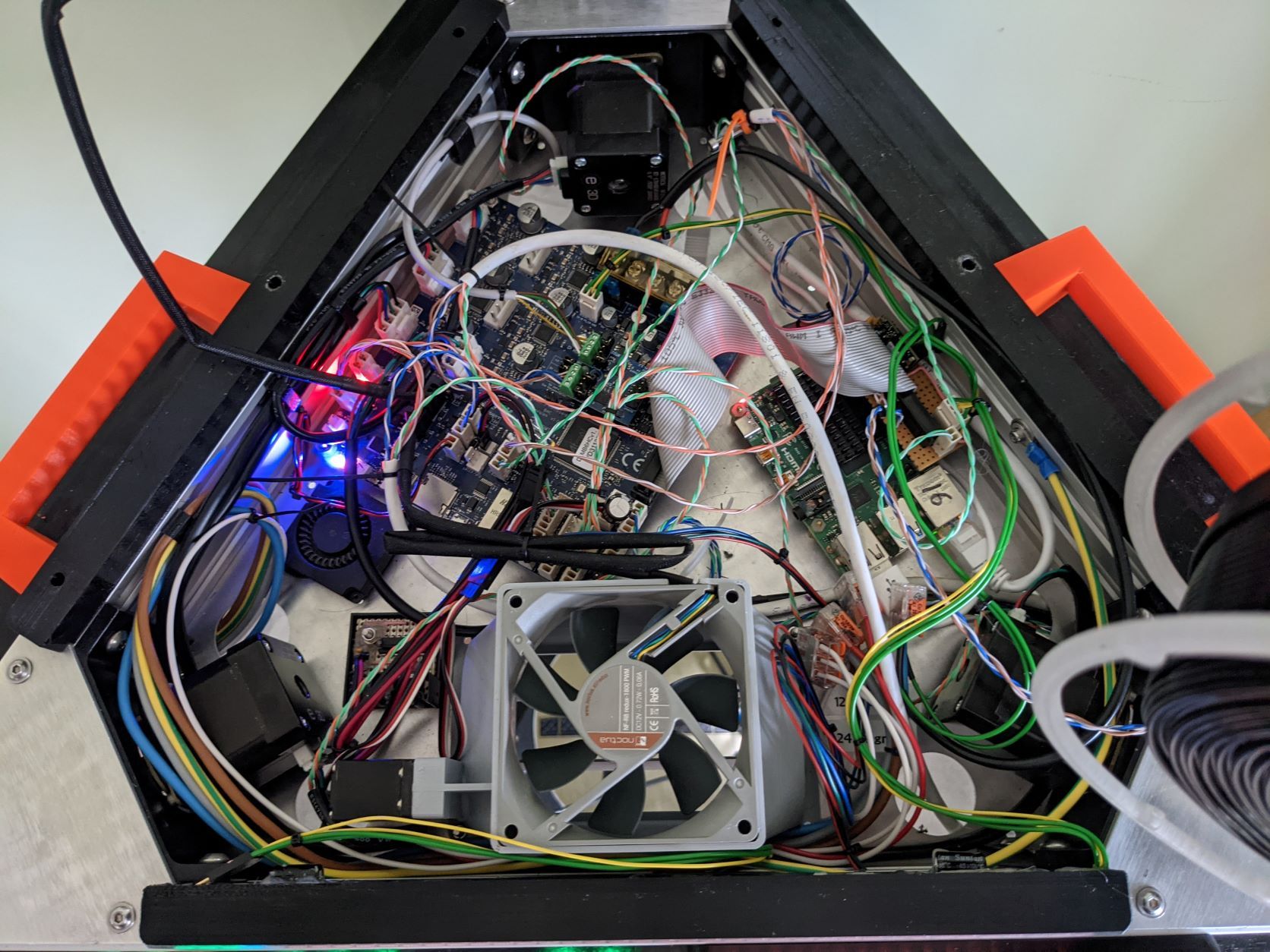

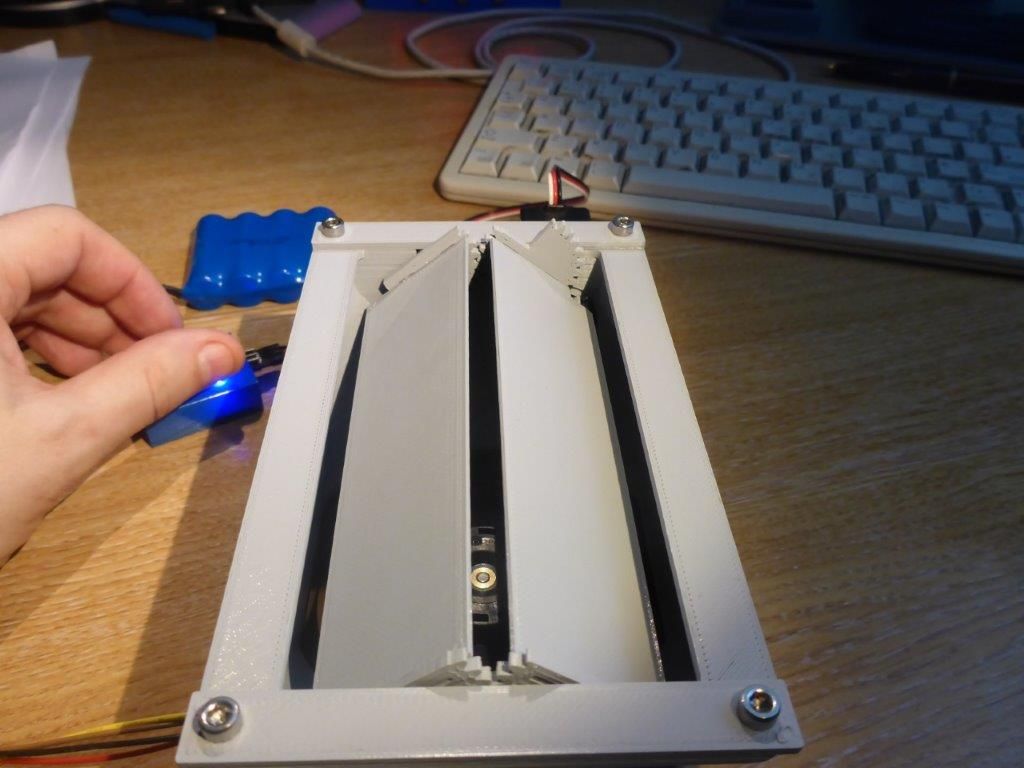

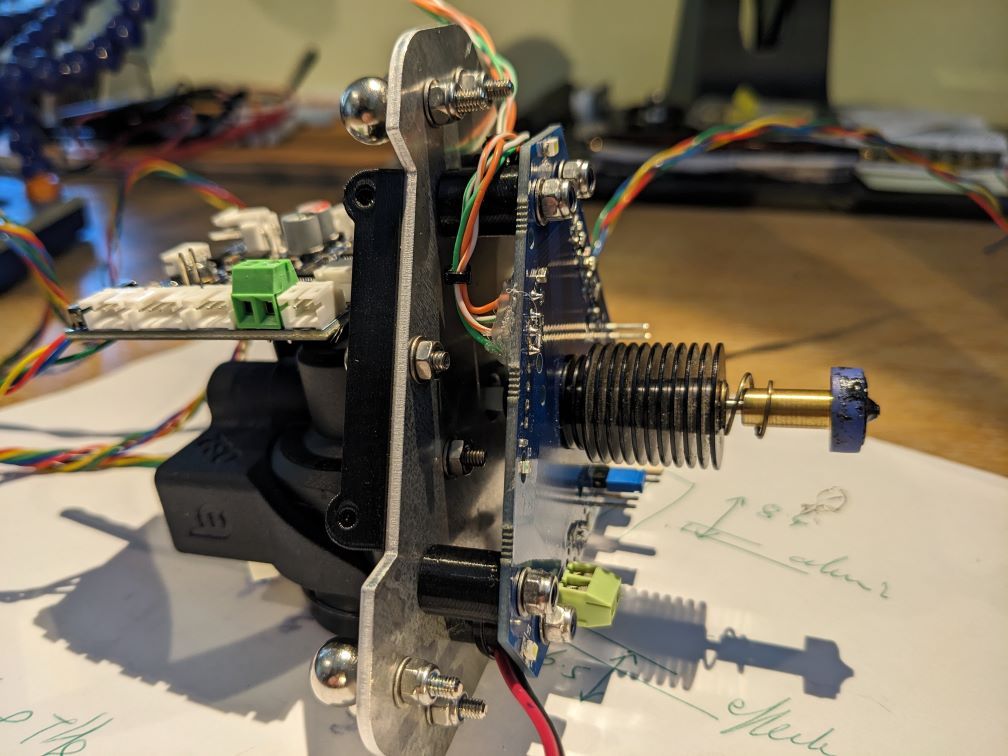

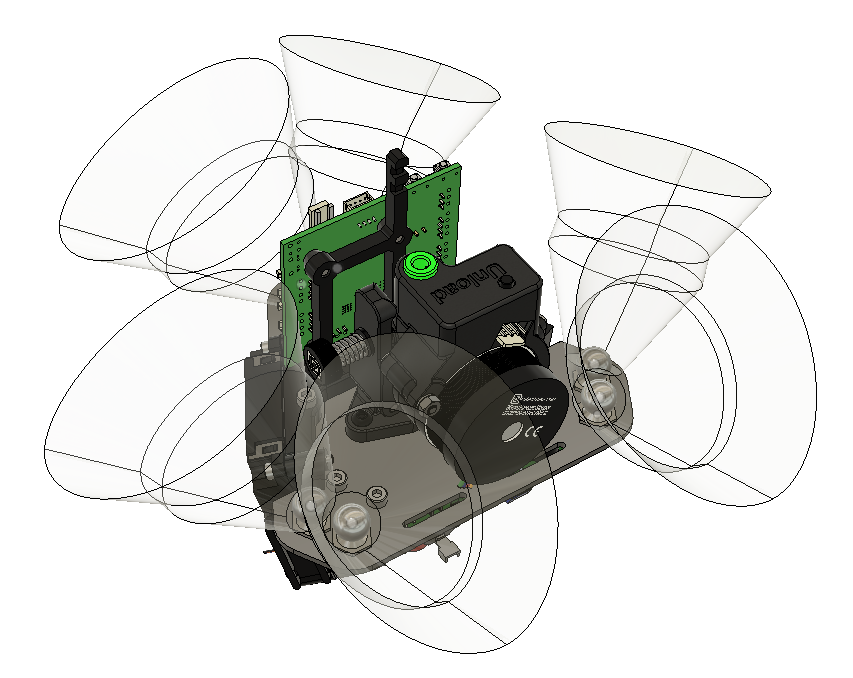

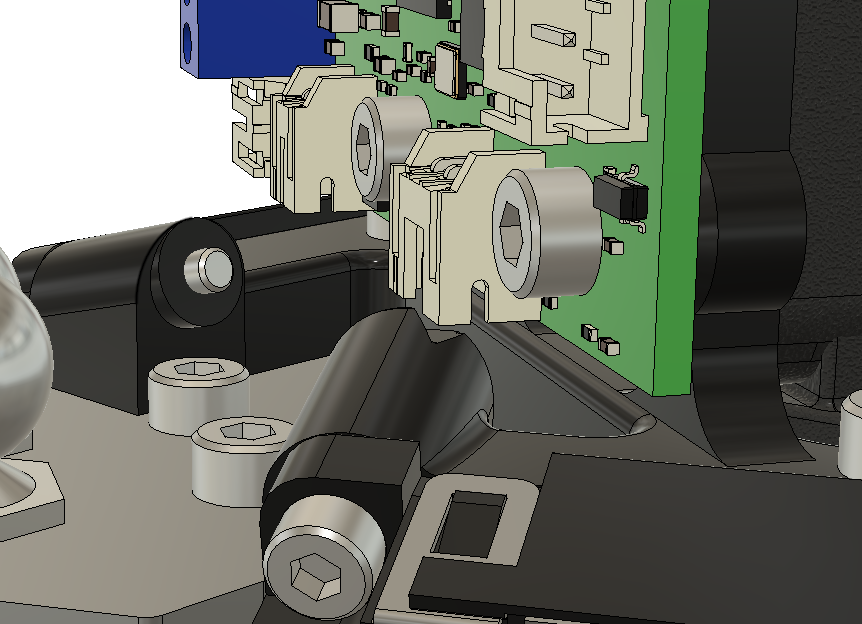

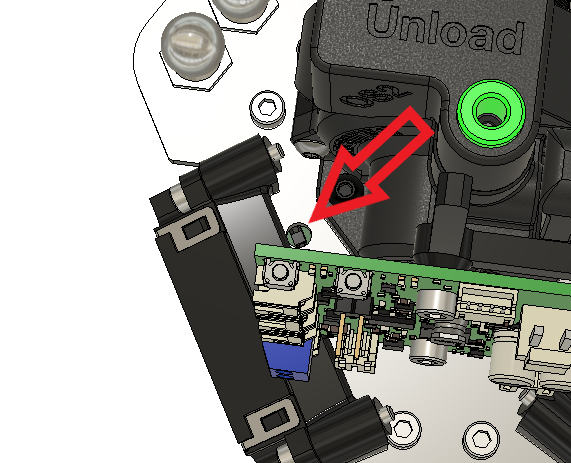

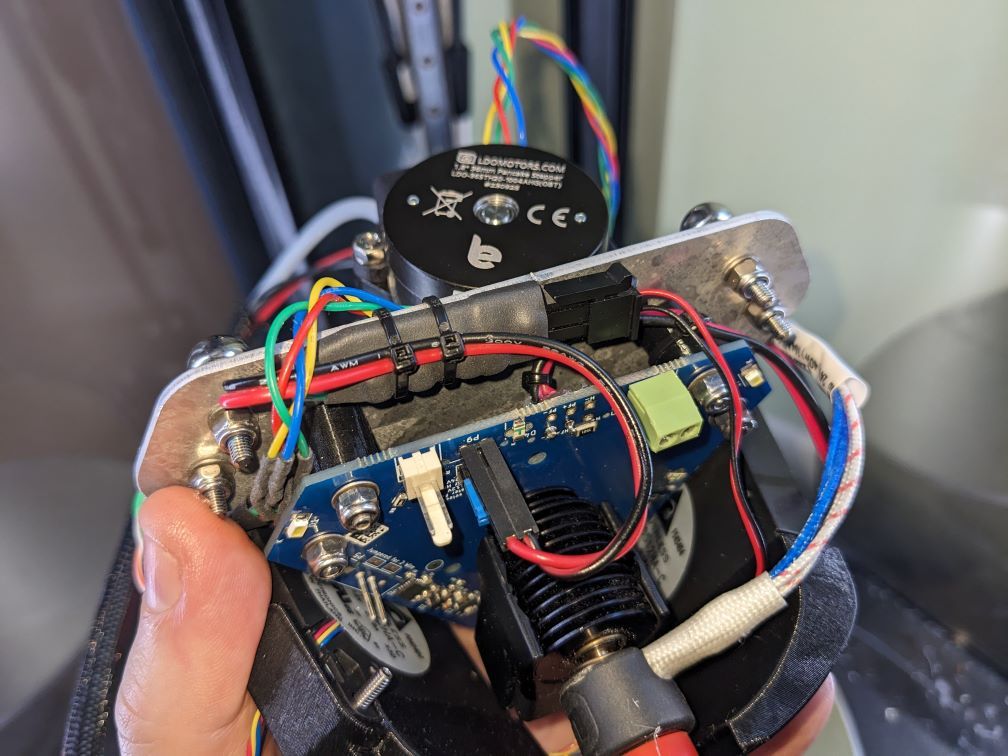

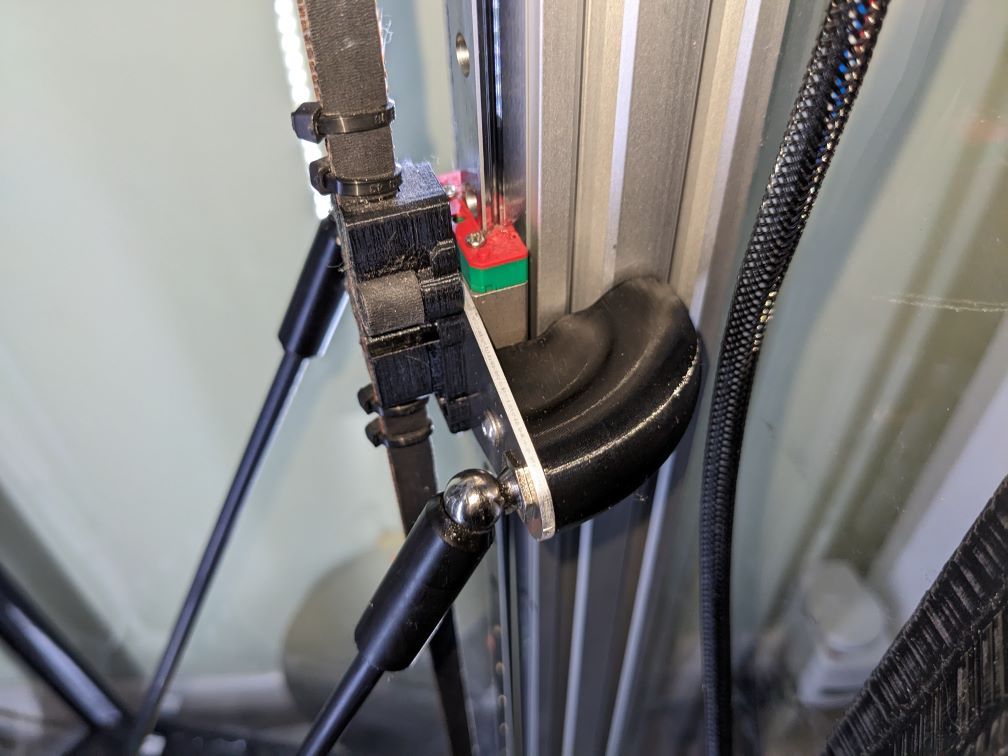

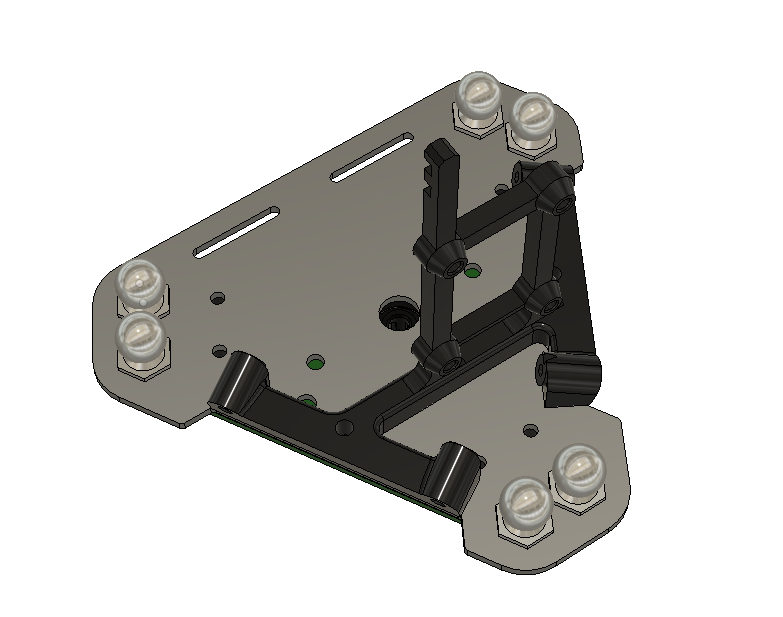

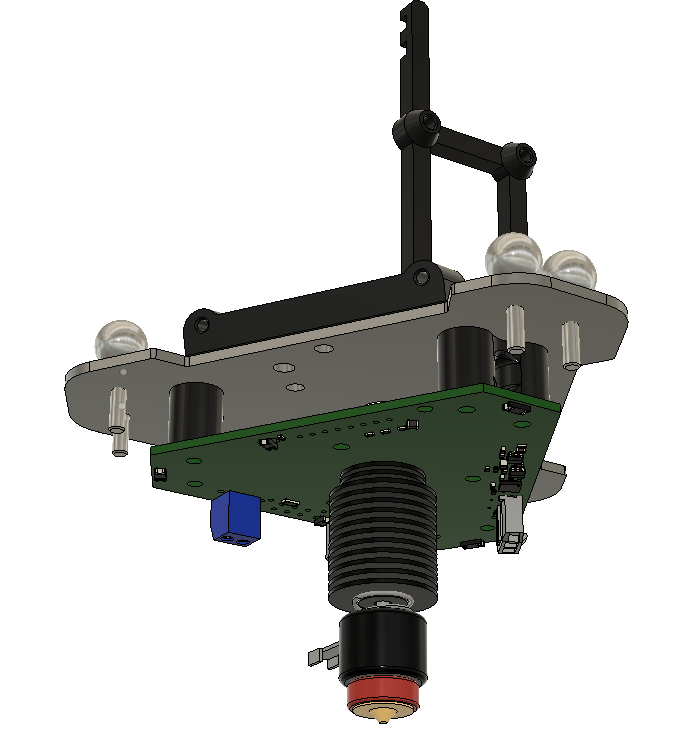

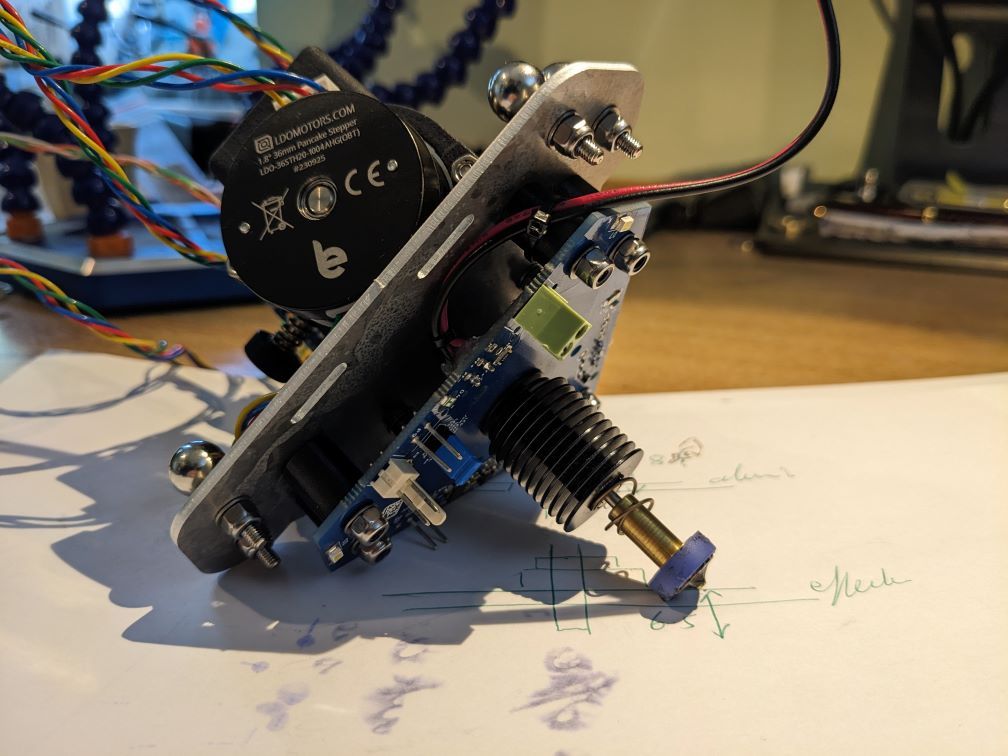

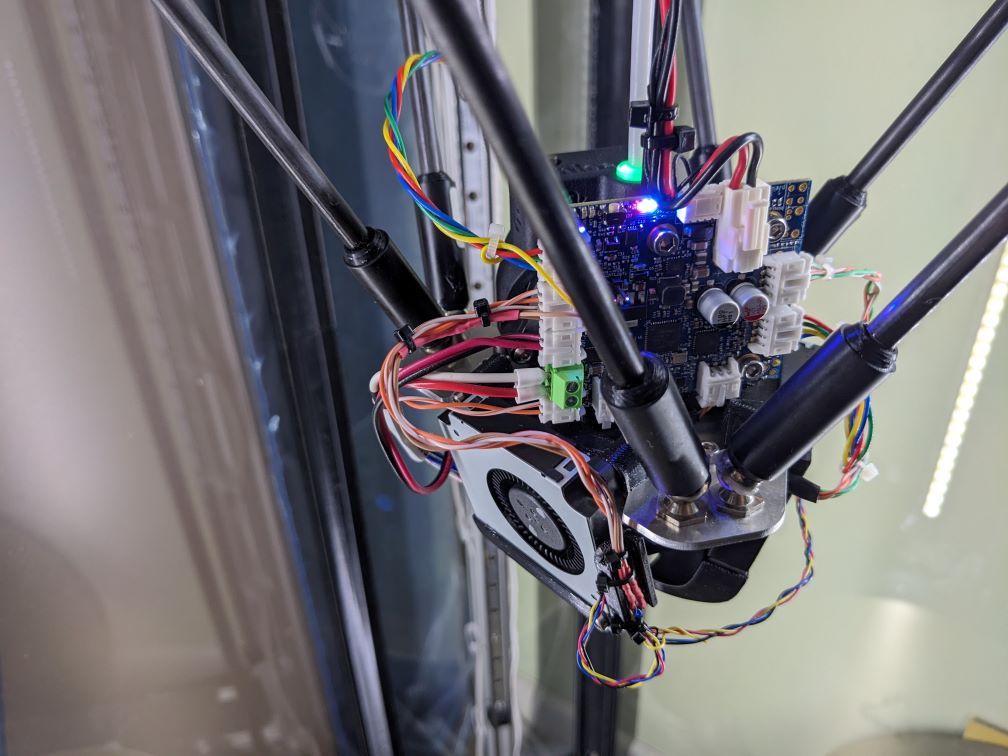

I've been assembling a printer from some of the parts of a Prusa i3 Mk2.5, but using a Mini5 as the control board.

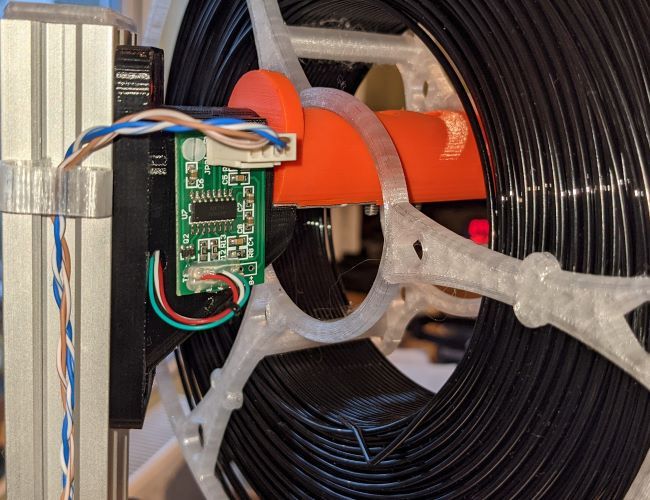

The inductive sensor I have is a 'pinda' V2 (completely genuine, bought from Prusa), which is acknowledged to have some temperature dependence but has a built-in thermistor to permit corrections. I didn't trust the Pinda V1 at varying temperatures, and have always done all bed preheating with the printhead as far from the bed as possible to minimise heat effects.

I should acknowledge https://forum.duet3d.com/post/302443, which specifies some thermistor parameters (100k, B=3950) and a temperature coefficient (0.02). Spoiler: although I now think the parameters for mine are very different from that, it was a useful part of getting to this point.

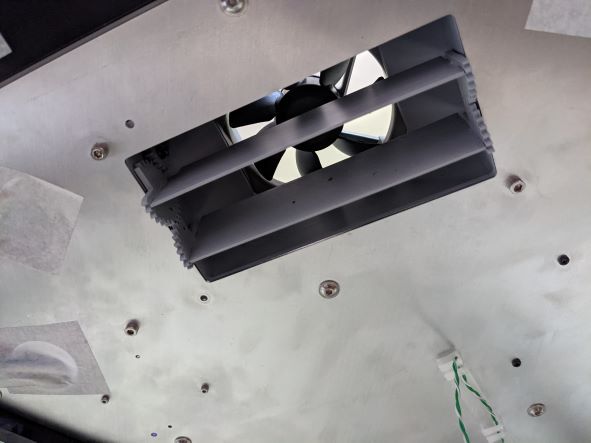

With RRF it should be relatively easy to confirm calibration, because you can probe, heat up the probe by heating the bed (my printer is in an enclosure, so this is fairly effective), then probe again and see what happens. Indeed, the stock Prusa had a function to calibrate something like this - sitting the head just above the bed for a few minutes while it heated and periodically probing. However, it turns out to not be that straightforward.

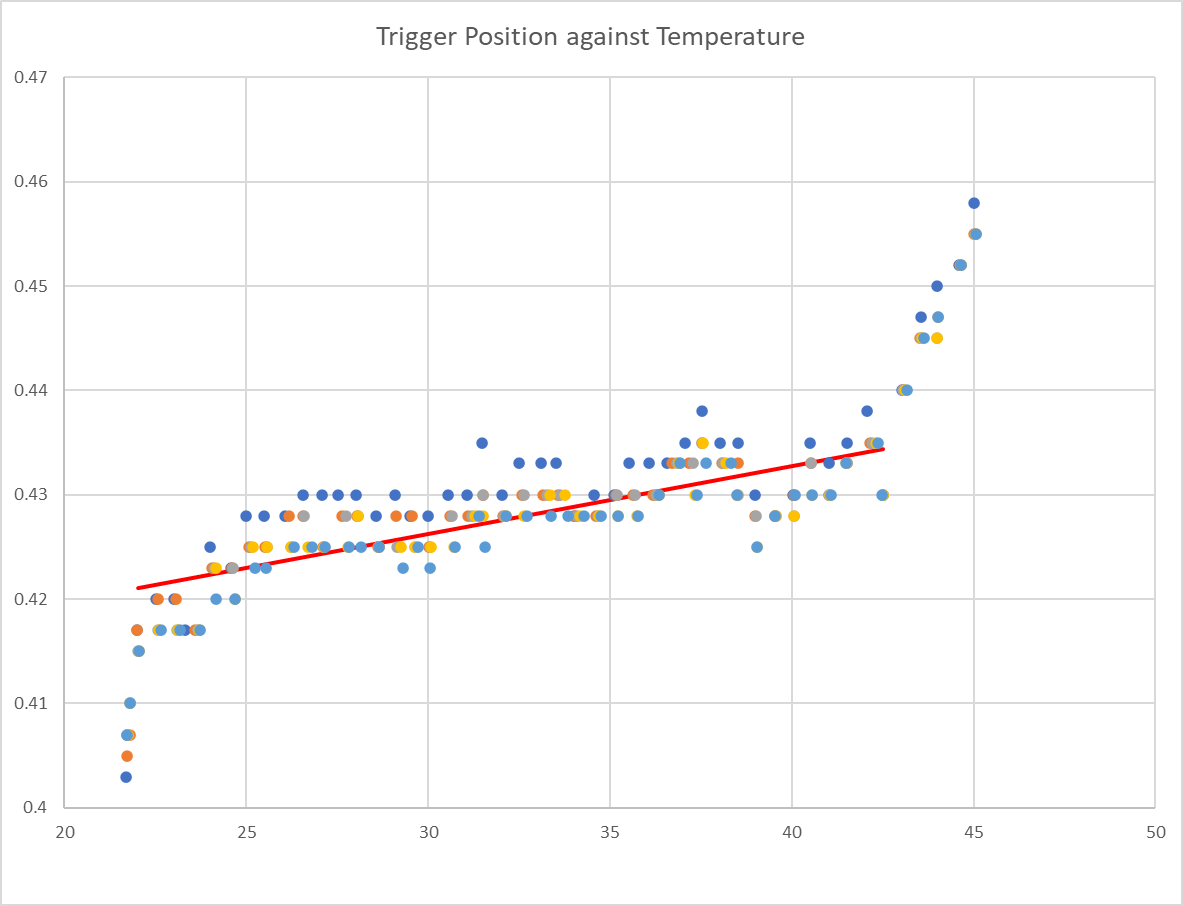

My first pass did exactly that - put the nozzle just above the bed, switch the bed on, probe every time the pinda gets half a degree hotter than it was and see what happens. I got this:

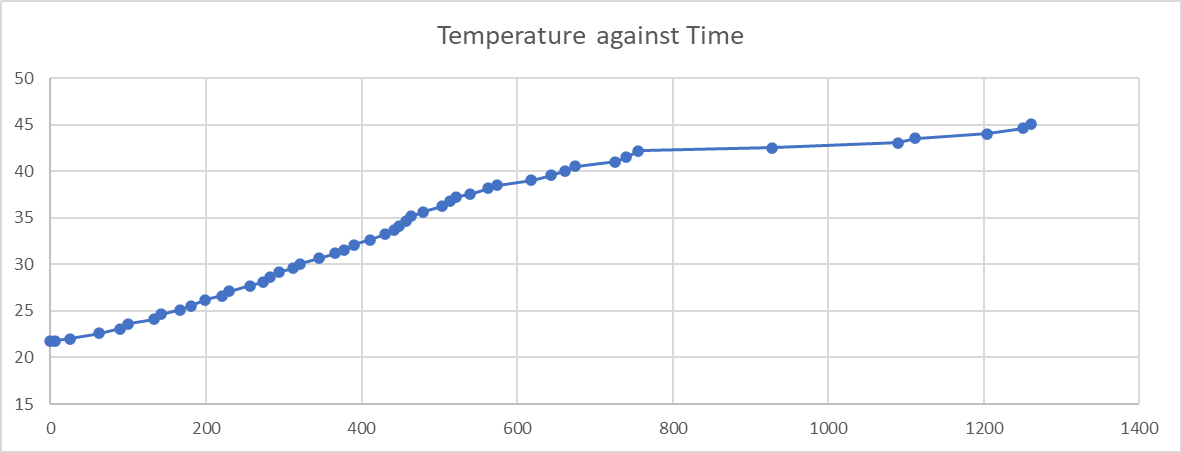

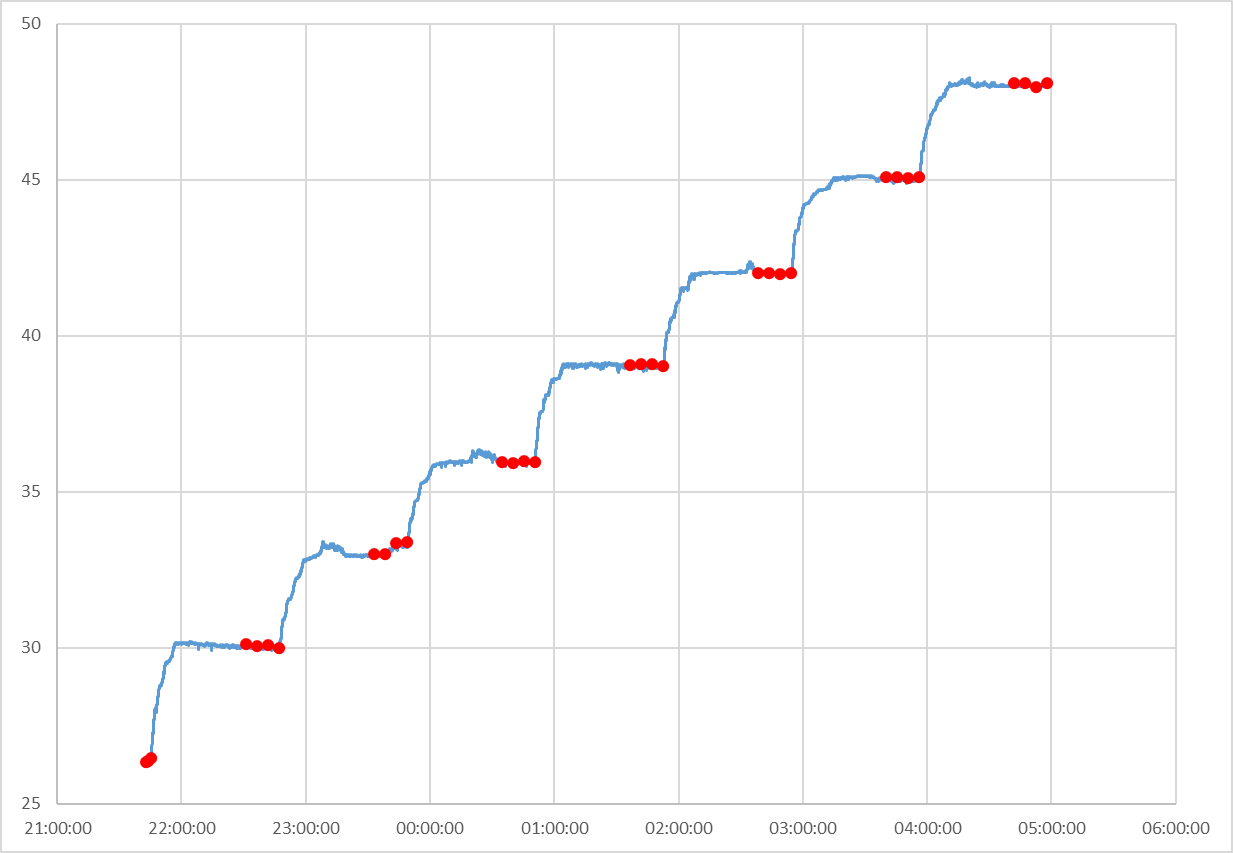

The interesting observations are that it is roughly linear through the middle, but with a much lower coefficient than in the post referenced above (about 0.001 in this case, one twentieth of that value), and that it is non-linear at the beginning and end. After a number of runs of this I noticed something else, and when I returned to that first data set it was there to see too. This is the graph of temperature against time for that run:

So that dramatic change in apparent temperature coefficient from about 42 degrees correlates with a dramatic change in the time it takes to get to each temperature increment. This leads me to think that the thermistor in the probe heats up faster than the electronics - so when the temperature is rising (fairly) quickly, the electronics are actually cooler than the reported temperature and so exhibit less apparent temperature dependence.
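As a rough illustration of that hypothesis (not a measurement), here is a minimal Python sketch that treats the electronics as following the thermistor through a first-order lag. The time constant `tau_min` and the ramp rates are invented for the example; nothing here is derived from the probe data.

```python
def simulate_lag(ramp_rate_c_per_min, tau_min, duration_min,
                 dt_min=0.01, start_c=25.0):
    """Return (thermistor_c, electronics_c) at the end of a linear ramp.

    Assumption: the thermistor follows the ramp exactly, and the
    electronics follow the thermistor with time constant tau_min.
    """
    thermistor = start_c
    electronics = start_c
    for _ in range(int(round(duration_min / dt_min))):
        thermistor += ramp_rate_c_per_min * dt_min
        # Forward-Euler step of dTe/dt = (Ts - Te) / tau
        electronics += (thermistor - electronics) * dt_min / tau_min
    return thermistor, electronics


# Hypothetical numbers: a fast ramp and a slow ramp, same time constant.
for rate in (1.0, 0.1):
    ts, te = simulate_lag(rate, tau_min=5.0, duration_min=40.0)
    print(f"ramp {rate} C/min: thermistor reads {ts:.2f} C, "
          f"electronics at {te:.2f} C, lag {ts - te:.2f} C")
```

In this model the lag settles at roughly ramp rate times time constant, so a fast ramp leaves the electronics well behind the reported temperature and a slow ramp lets them nearly catch up, which would be consistent with the change in apparent coefficient when the heating slows down.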

So then I did it again. This time I coded a more complex heating algorithm: it uses PID (but not very well tuned) to adjust the bed setpoint temperature as if it were the power input, and tries to hold the pinda at a constant temperature for 40 minutes. It then takes four sets of probe readings at 5 minute intervals, with 3 probes per set, before moving on to the next temperature.
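For anyone wanting to picture the control scheme, here is a minimal Python sketch of a PID loop whose output is the bed setpoint rather than heater power. It is not the actual macro (that is written in RRF's macro language); the gains, the clamping-based anti-windup and the toy thermal model (`coupling`, `tau_s`) are all assumptions made up for illustration.

```python
class BedSetpointPID:
    """PID controller whose output is a bed setpoint (C), not heater power."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, target_c, measured_c, dt_s):
        error = target_c - measured_c
        self.integral += error * dt_s
        derivative = (0.0 if self.prev_error is None
                      else (error - self.prev_error) / dt_s)
        self.prev_error = error
        output = (self.kp * error + self.ki * self.integral
                  + self.kd * derivative)
        clamped = min(max(output, self.out_min), self.out_max)
        if clamped != output:
            # Anti-windup (an assumption, not the post's method): undo this
            # step's integration while the setpoint is pinned at a limit.
            self.integral -= error * dt_s
        return clamped


def simulate_hold(target_c, duration_s, dt_s=10.0, ambient_c=25.0):
    """Toy plant: the probe relaxes towards a temperature part-way between
    ambient and bed. coupling and tau_s are invented for illustration."""
    coupling, tau_s = 0.4, 600.0
    pid = BedSetpointPID(kp=5.0, ki=0.02, kd=0.0,
                         out_min=ambient_c, out_max=100.0)
    probe_c = ambient_c
    for _ in range(int(duration_s / dt_s)):
        bed_c = pid.update(target_c, probe_c, dt_s)
        equilibrium_c = ambient_c + coupling * (bed_c - ambient_c)
        probe_c += (equilibrium_c - probe_c) * dt_s / tau_s
    return probe_c


print(f"probe after 3 h: {simulate_hold(35.0, 3 * 3600):.2f} C")
```

Driving the bed setpoint instead of the heater directly leaves the firmware's own bed heater control untouched; the outer loop only decides what temperature to ask for.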

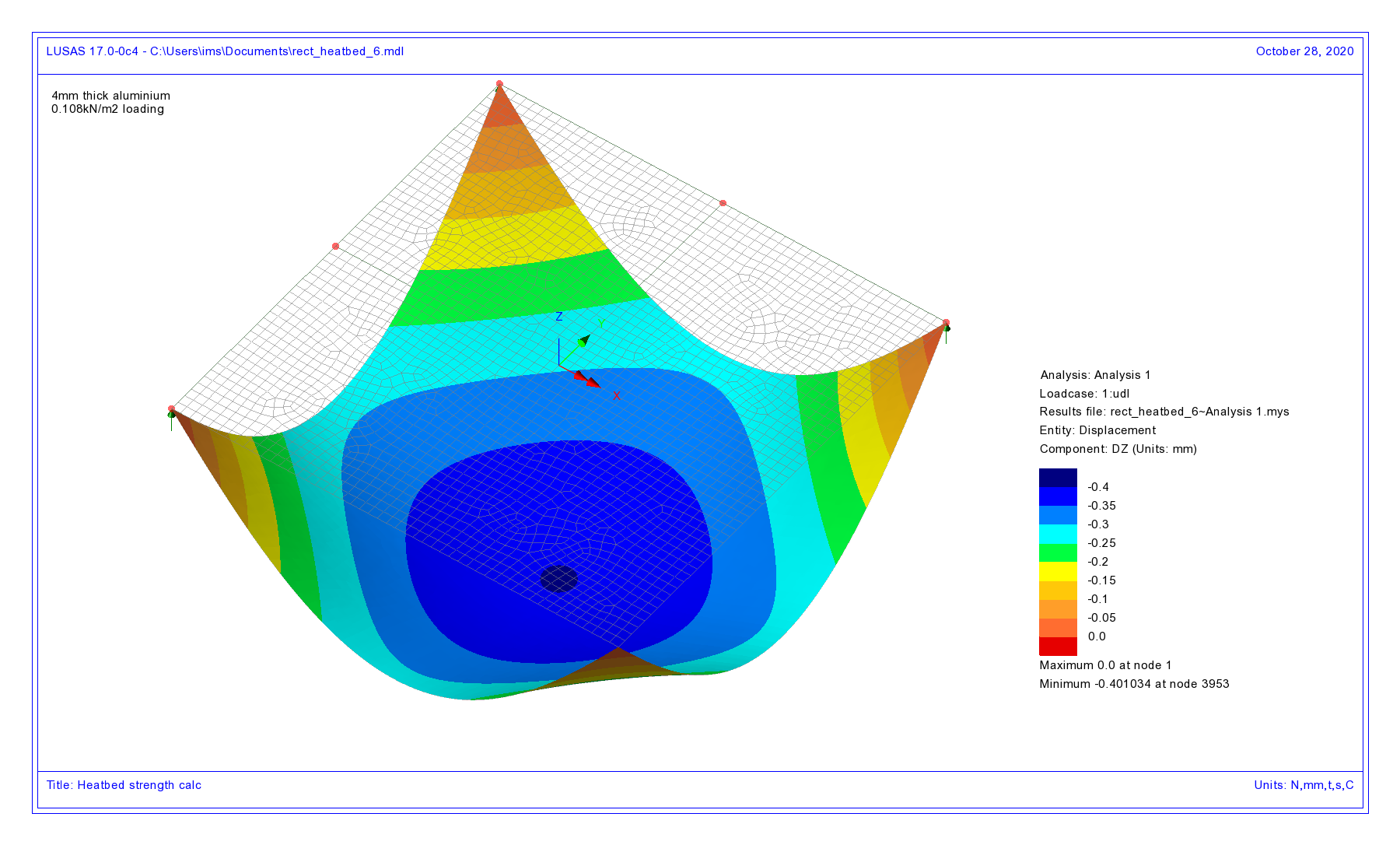

This does of course come with the caveat that I’m assuming my bed doesn’t itself move at different temperatures. I suppose I could set up a dial gauge on it (but the only one I have has lost its spring, so not terribly useful).

This is fairly successful (with some cheating on the first cycle to avoid a massive over-temperature spike while the integral term initialises / stabilises) and I typically get a temperature v. time from the pinda thermistor like this (red dots are where probing was done):

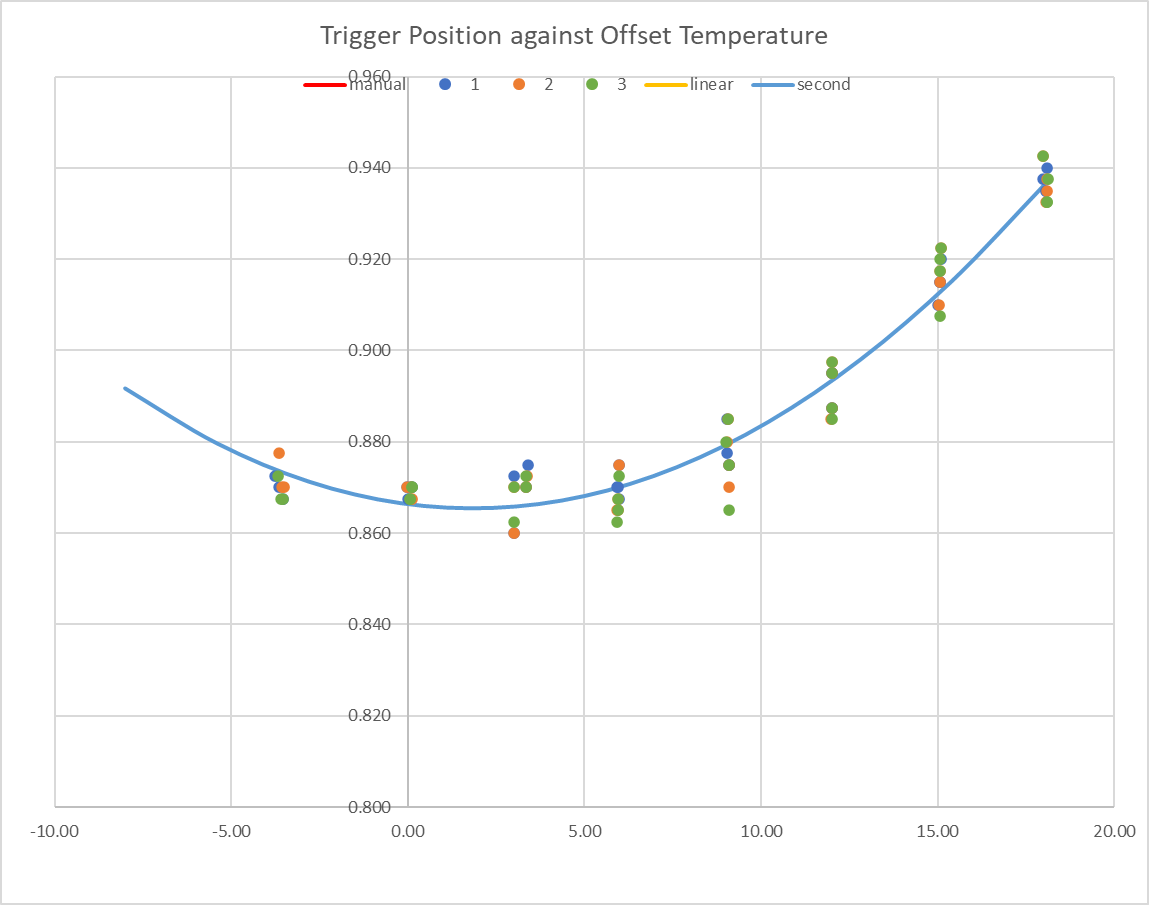

Now I get a more consistent temperature coefficient, which is distinctly non-linear, but which matches quite nicely to a 2nd order polynomial that RRF can accommodate.

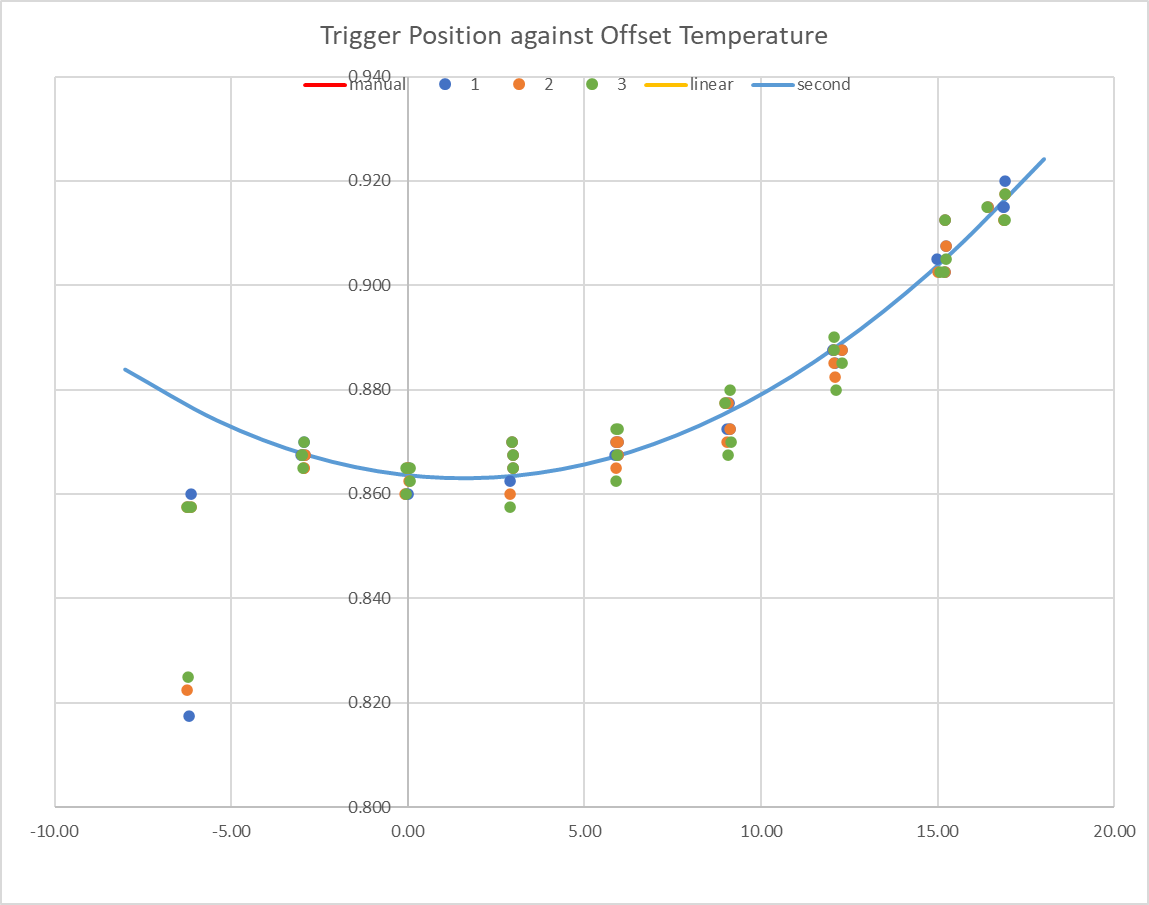

The first readings (before the bed is heating at all) are often off the curve, but by varying degrees. I haven't come up with any good hypothesis for this. This is another run, with an unusually large first set difference.

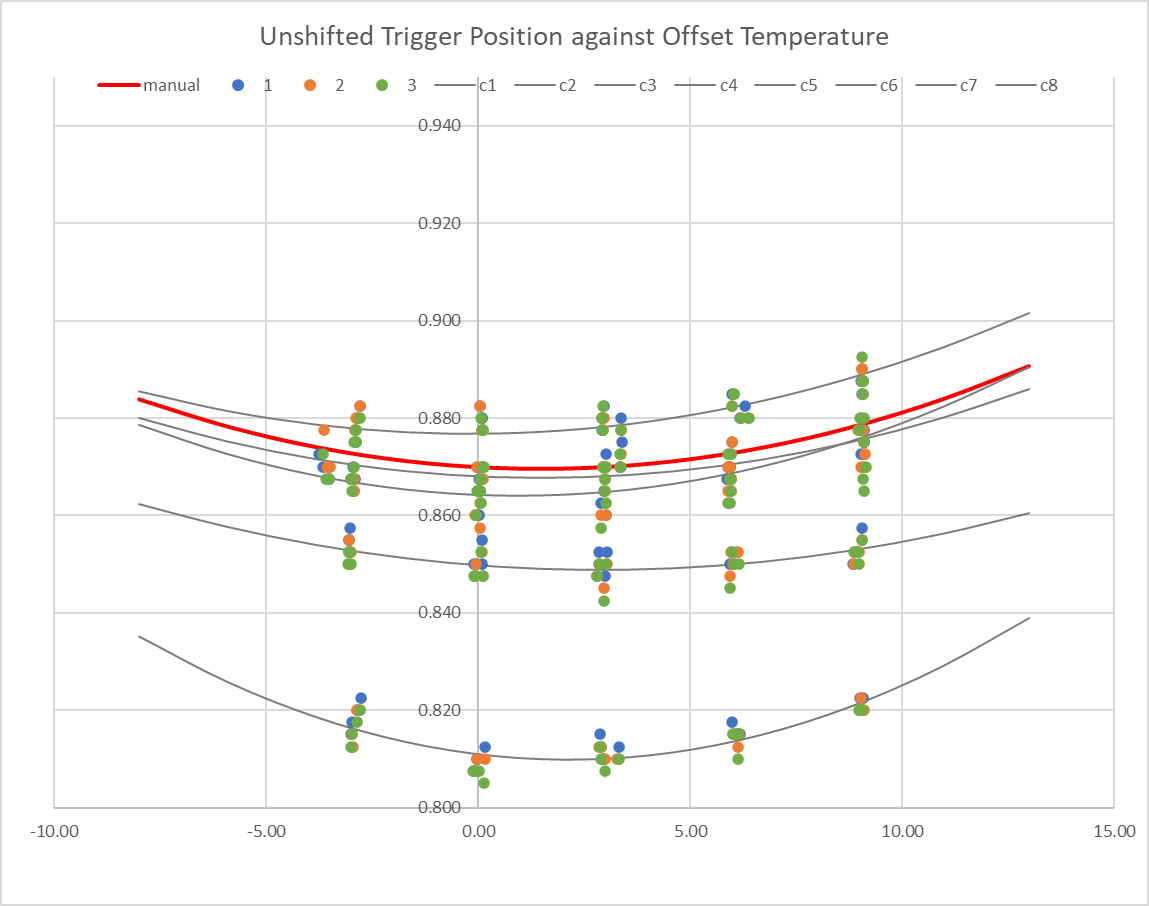

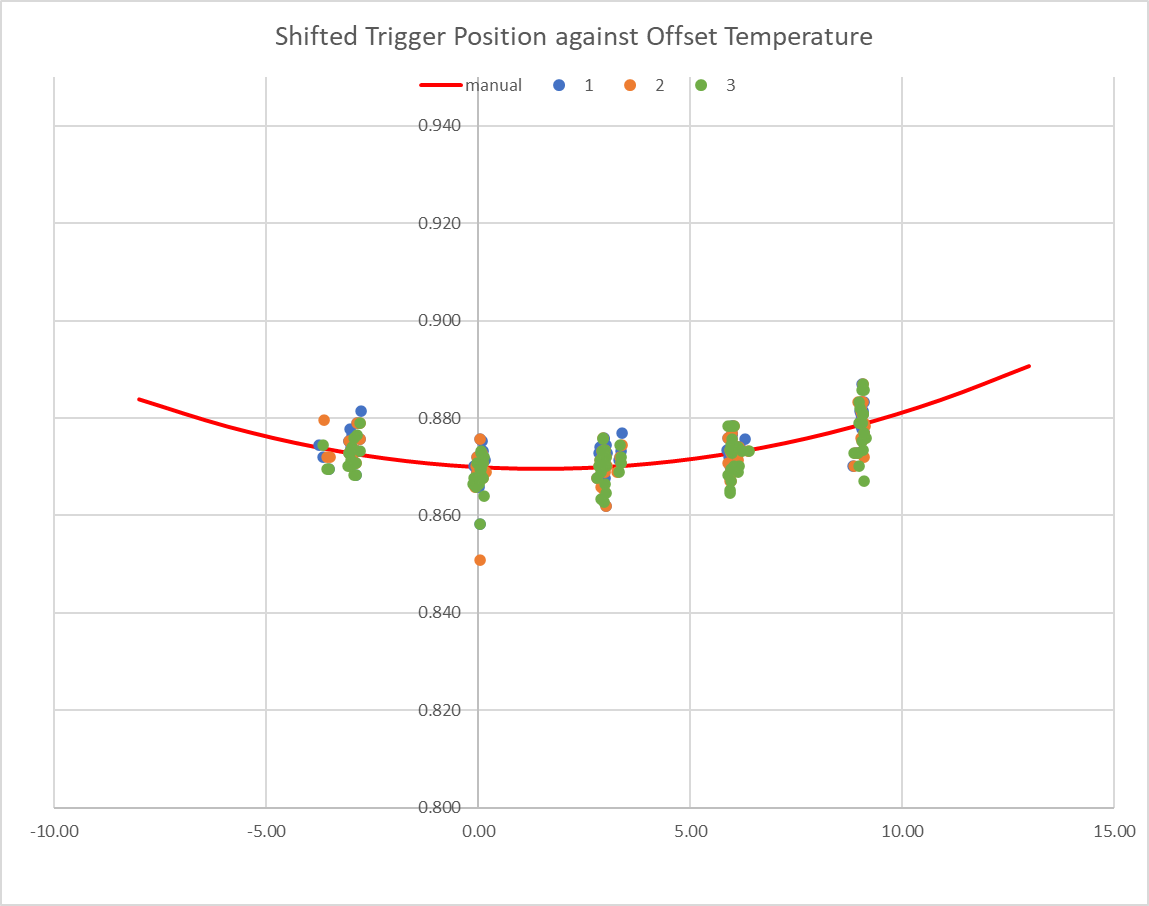

If I neglect the pre-heating data and put multiple runs on one graph, I find that the behaviour is relatively consistent. I first discovered my homez approach was not giving me a consistent result, which manifests as all the points in the test run matching the curve relative to each other, but offset by an absolute amount of up to 0.06mm low (i.e., the homez has apparently set up the axes too high). I’ve changed my homez to do multiple head movements much more slowly, and that seems to have fixed the issue.

Also, I’ve set zero compensation for the duration of this testing, so actually the homez is setting to 0.87mm regardless of probe temperature at the time, which will introduce the error I’m trying to quantify into my testing.

For both these reasons, I take the data from a single test run (typically 32 G31s: eight sets, one per temperature, of four readings each), fit a second order polynomial, find the shift that would bring the polynomial to my calibration value (0.87mm at 30C), then apply that shift to all the data in that test. When I do this, the resulting scatter across all the tests (five runs and counting) is about the same as the scatter within any one test.
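The fit-and-shift step can be sketched in a few lines of Python. This is an illustration only: the analysis itself was done in a spreadsheet, the function names are hypothetical, and the data below is synthetic (generated from a known curve with a deliberate 0.06mm offset), not taken from any of the test runs.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit y = c0 + c1*x + c2*x**2; returns (c0, c1, c2)."""
    # Normal equations: A[i][j] = sum(x**(i+j)), b[i] = sum(y * x**i)
    s = [sum(x ** k for x in xs) for k in range(5)]
    a = [[s[i + j] for j in range(3)]
         + [sum(y * x ** i for x, y in zip(xs, ys))]
         for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting on the 3x4 matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(3):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * p for v, p in zip(a[r], a[col])]
    return tuple(a[i][3] / a[i][i] for i in range(3))


def shift_run(temps_c, heights_mm, cal_temp_c, cal_height_mm):
    """Fit one run, then shift it so the fitted curve passes through
    (cal_temp_c, cal_height_mm). Returns (shifted, (c1, c2), shift)."""
    # Centring on the calibration temperature makes c0 the fitted
    # height at that temperature.
    xs = [t - cal_temp_c for t in temps_c]
    c0, c1, c2 = fit_quadratic(xs, heights_mm)
    shift = cal_height_mm - c0
    return [h + shift for h in heights_mm], (c1, c2), shift


# Synthetic example: a run that reads 0.06mm low at 30C.
temps = [25.0, 27.5, 30.0, 32.5, 35.0, 37.5, 40.0, 42.5]
heights = [0.81 - 0.000470 * (t - 30) + 0.000159 * (t - 30) ** 2
           for t in temps]
shifted, (c1, c2), shift = shift_run(temps, heights, 30.0, 0.87)
print(f"shift {shift:+.4f} mm, c1 {c1:.6f}, c2 {c2:.6f}")
```

Because the shift is a constant added to every point, it changes only the absolute level of a run and leaves the first and second order coefficients untouched, which is why runs with different homing errors can still be overlaid.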

I'm curious how the Prusa firmware manages to do a calibration in a few minutes if this time dependency exists, but possibly it doesn't actually calibrate directly, and just takes some readings that it uses to adjust the built-in calibration.

This is fairly niche information, I appreciate, but I thought I'd post in case anyone is interested. Actually, my main conclusion is that I didn’t really need to bother, because over the 15C range I’m interested in (most of my prints start with the probe at about the same temperature), the trigger height only varies by 0.01mm (ish).

From reading on (mainly Prusa) forums, I suspect that Pinda v2 probes are possibly not only temperature-dependent but also show quite widely varying dependence – there are a number of reports of people changing probe and suddenly finding calibrating the first layer much easier or much more difficult. Possibly I’m lucky with a low-variation one.

Even without such a (hypothesised) variation, this specific data may not be relevant to anyone else, because the apparent temperature correlation is a function of the thermistor properties (I have no idea how consistent the thermistor is), the electronics' actual dependency and the behaviour of the bed (i.e., my bed likely moves differently in response to temperature than anyone else's - it was originally a Prusa heated bed, but I've modified it a bit and it's not on a Prusa frame).

Conclusion

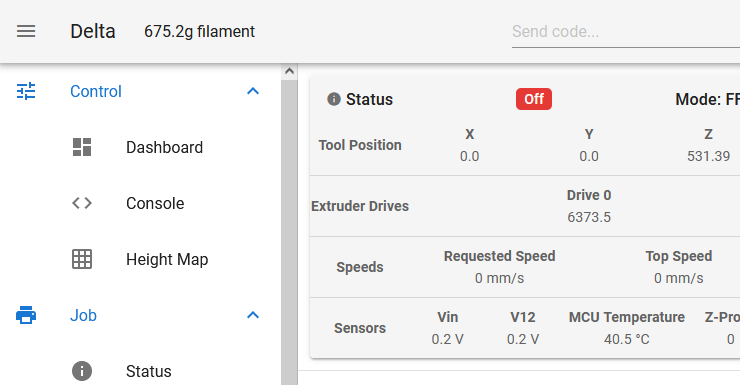

So the conclusion to all that is that I've got a calibrated 2nd order polynomial temperature compensation, but actually it's a small enough effect that I could do without. I'm using T-0.000470:0.000159 in my G31 config settings. Over the range 25 to 35C (where I normally am when probing the bed at the start of a print), that equates to a variation in trigger height of only 0.007mm (between max and min), or about three microsteps.
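The 0.007mm figure can be checked with a short Python sketch. It assumes the two T coefficients are applied as first and second order terms in the difference from the calibration temperature, and that the calibration temperature is 30C (the value used for the 0.87mm calibration point); both are assumptions about how the settings combine, and the function names are hypothetical.

```python
def trigger_height_offset(temp_c, cal_temp_c, c1, c2):
    """Change in trigger height (mm) relative to the calibration temperature."""
    d = temp_c - cal_temp_c
    return c1 * d + c2 * d * d


def variation_over_range(t_lo, t_hi, cal_temp_c, c1, c2):
    """Max minus min of the offset over [t_lo, t_hi]."""
    candidates = [t_lo, t_hi]
    if c2 != 0:
        # A quadratic has its turning point where the derivative is zero.
        vertex = cal_temp_c - c1 / (2 * c2)
        if t_lo < vertex < t_hi:
            candidates.append(vertex)
    values = [trigger_height_offset(t, cal_temp_c, c1, c2)
              for t in candidates]
    return max(values) - min(values)


C1, C2 = -0.000470, 0.000159   # from T-0.000470:0.000159
CAL_TEMP_C = 30.0              # assumed calibration temperature
STEPS_PER_MM = 400             # Z axis steps/mm quoted in the post

spread_mm = variation_over_range(25.0, 35.0, CAL_TEMP_C, C1, C2)
print(f"variation {spread_mm:.4f} mm = {spread_mm * STEPS_PER_MM:.1f} steps")
```

The turning point of the curve falls inside the 25 to 35C range, so the minimum is not at either end; checking only the endpoints would understate the variation.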

Workings

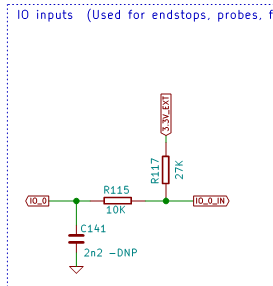

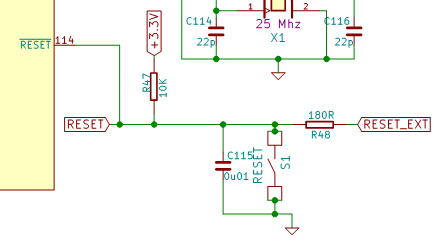

The rest of this is what I used to conclude the above.

These are my macros that do the calibration (you need both because probeTcalib calls probe3). Note that there are configuration variables at the start of both that need setting (e.g. which sensor is the one in the pinda). As noted, I set a zero temperature coefficient for the probe before running this, but I think the lastStopHeight is reported without temperature compensation (i.e. the compensation is not applied to the stop height, it's applied to the probe offset value used to set the head coordinates), so that wouldn't actually matter.

probeTcalib.g

probe3.g

This is the spreadsheet that I use to analyse a single run, but I've taken out the 32000+ rows of temperature values to shrink the file size. If anyone wants my data I'm happy to email an example - the spreadsheet with the temperature log data is about 3.5MB.

probe T calibration - 20230304 - pruned.xlsx

This is the spreadsheet with multiple runs overlaid. The first two cases in this are with my ‘inaccurate’ homez, resulting in a ‘large’ shift (up to 0.06mm); the others are with my new homez, and the shift is about a tenth of that. (For information, my Z axis is 400 steps/mm, or 0.0025mm per step.)

probe T calibration - combined limited.xlsx