Hot-End Thermistor Temperature calibration

-

Your point for an accurate thermometer is well taken but I'm not sure why you would need an oven/bath to do this. Air baths are notorious for thermal gradients (I used to help design and evaluate gas chromatographs). Pushing a well calibrated 1-mm (or smaller) diameter thermocouple probe through the heat-break and into the inside of the nozzle would be my approach.

I have a fourth suggestion:

- Publish thermistor Beta values for the 180-260°C range. I will try to put together some suggestions for these based on manufacturers' published R vs T tables.

This must be much better than using the Beta values published for a 0-25°C range.

Maybe this will be enough to eliminate the need for calibration.
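As a rough sketch of how a range-specific Beta could be derived from a manufacturer's R vs T table: the simple Beta model says ln(R) is linear in 1/T, so a least-squares line through only the table rows between 180 and 260°C gives a Beta tuned to that range. The function names and the synthetic table below are made up for illustration; a real run would substitute rows copied from an actual datasheet.

```python
import math

KELVIN = 273.15


def fit_beta(table, t_min_c, t_max_c):
    """Least-squares fit of ln(R) = ln(R_inf) + beta / T over a temperature window.

    table: iterable of (temp_c, resistance_ohm) rows.
    Returns (beta, r_inf) for the rows with t_min_c <= temp_c <= t_max_c.
    """
    pts = [(1.0 / (t + KELVIN), math.log(r))
           for t, r in table if t_min_c <= t <= t_max_c]
    if len(pts) < 2:
        raise ValueError("need at least two table rows inside the range")
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    beta = sxy / sxx                       # slope of ln(R) against 1/T
    r_inf = math.exp(mean_y - beta * mean_x)
    return beta, r_inf


def synthetic_table(beta, r25, temps_c):
    """Stand-in for a datasheet table (NOT real data): an ideal Beta-model thermistor."""
    return [(t, r25 * math.exp(beta * (1.0 / (t + KELVIN) - 1.0 / (25.0 + KELVIN))))
            for t in temps_c]


if __name__ == "__main__":
    table = synthetic_table(4000.0, 100_000.0, range(0, 301, 10))
    beta, r_inf = fit_beta(table, 180, 260)
    print(f"beta over 180-260 C: {beta:.1f}")
```

With a real table the fitted Beta will generally differ between the 0-25°C window and the 180-260°C window, and that difference is the whole point of publishing a high-range value.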

-

@phaedrux Then a PT1000 could be adequate for any temp below 350ºC? I intend to be able to print in PLA, PETG, ABS....

-

@ignacmc yes, PT100 is great for lower temps too

-

@jay_s_uk Think I will be getting one and ditching the NTC100K....I suppose it does not have any disadvantage over a thermistor even at low temps

-

Just to throw another spanner in the works, temperature sensors will only measure the temperature at the point where they are fitted and not necessarily at the point we are interested in. One can have a temperature gradient between the cold filament entering the hot end and the nozzle tip, with the heater and sensor fitted somewhere along that gradient. Furthermore, nozzles are often made from hardened materials which have different thermal characteristics to the block into which they are screwed. Also, the nozzle temperature can be affected by deflected part cooling air. Some time ago I attempted to measure this phenomenon https://somei3deas.wordpress.com/2020/05/21/the-effect-of-deflected-part-cooling-air-on-brass-and-steel-nozzle-temperatures/

My conclusion from all of this is that a temperature sensor will only give an approximate indication of the temperature that we are really interested in due to a number of factors in addition to the accuracy of the sensor itself. So calibrating a sensor to make it as accurate as possible is largely irrelevant as one will still need to print temperature towers in order to determine the optimum temperature to use, regardless of the absolute value reported by the sensor itself.

-

@ignacmc Yes, a PT1000 sensor will have far better absolute temperature accuracy than a thermistor, if you don't have a precise way to calibrate the thermistors.

Thermistors have very high resolution, and can be easily calibrated over a narrow temperature range. I make much of my scientific living working with systems controlled to absolute temperatures within 0.01C. Thermistors are great for this, if you only need a 10 degree span and have access to a calibration lab. However, without calibration, tiny errors in the Steinhart-Hart coefficients add up to very large temperature errors when used over a wide span.
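To put a rough number on how a small coefficient error grows with span, here is a minimal sketch using the simple Beta model (the C term is ignored). The 100K base resistance and the Beta of 3950 match the NTC100K B3950 part mentioned in this thread; the 1% Beta error is purely an assumed figure for illustration.

```python
import math

T0_K = 298.15  # 25 C reference temperature in kelvin


def beta_resistance(temp_c, r25, beta):
    """Resistance of an ideal Beta-model thermistor at temp_c."""
    return r25 * math.exp(beta * (1.0 / (temp_c + 273.15) - 1.0 / T0_K))


def beta_temperature(r, r25, beta):
    """Temperature in C that a Beta-model conversion reports for resistance r."""
    inv_t = 1.0 / T0_K + math.log(r / r25) / beta
    return 1.0 / inv_t - 273.15


def reading_error(true_temp_c, true_beta, assumed_beta, r25=100_000.0):
    """Reported minus true temperature when the configured beta is wrong."""
    r = beta_resistance(true_temp_c, r25, true_beta)
    return beta_temperature(r, r25, assumed_beta) - true_temp_c


if __name__ == "__main__":
    for t in (25, 30, 100, 200, 250):
        err = reading_error(t, true_beta=3950.0, assumed_beta=3950.0 * 1.01)
        print(f"{t:4d} C: error {err:+.2f} C")
```

Near 25°C the error is negligible, which is why a narrow-span calibration works so well, while at 250°C the same 1% error is worth a few degrees.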

Starting with something very close to linear in the first place, and with a temperature coefficient which is set by the properties of a (nearly) pure element, such as platinum, results in a sensor which can be used over a very wide range with no calibration. A PT100 or PT1000 sensor has lower resolution than a thermistor (it's very hard to read to 0.01C), but a much wider reliable span of readout. It should be within 1C over the entire temperature range from room temp to 300C or higher.

You should get a decent quality calibration resistor (0.1% accuracy) of about 2000 ohms, to check that the duet readback is working right in PT1000 mode. If it works out right, you will be in great shape. The reason I bring this up is that, for example, my duet2 board (1.02 revision) seems to have a significant charge injection problem on the scanning ADC. Putting the PT1000 on channel zero shows a significant (5C) offset at room temp, but putting it on channel 1 is fine. This is likely due to residual charge on the sample/hold capacitor left over from whatever port the ADC scanned before reading channel 0. I haven't looked into the software to see in detail, but it is a classical scanning A/D problem.
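For anyone wanting to know what reading to expect from a check resistor, a minimal sketch of the standard platinum RTD conversion follows. It uses the IEC 60751 Callendar-Van Dusen coefficients for temperatures at or above 0°C; the function names are just illustrative, and the firmware's own conversion may differ in detail.

```python
import math

# IEC 60751 coefficients for standard platinum RTDs, valid for T >= 0 C
A = 3.9083e-3
B = -5.775e-7


def rtd_resistance(temp_c, r0=1000.0):
    """Resistance of a PT1000 (r0=1000) or PT100 (r0=100) at temp_c >= 0 C."""
    return r0 * (1.0 + A * temp_c + B * temp_c ** 2)


def rtd_temperature(r, r0=1000.0):
    """Invert the quadratic above; valid for r >= r0 (that is, T >= 0 C)."""
    disc = A * A - 4.0 * B * (1.0 - r / r0)
    return (-A + math.sqrt(disc)) / (2.0 * B)


if __name__ == "__main__":
    # A 2000 ohm check resistor in place of a PT1000 should read about 266 C.
    print(f"{rtd_temperature(2000.0):.1f} C")
```

A 0.1% resistor is off by at most 2 ohms at 2000 ohms, which is roughly half a degree at that point on the curve, so an offset of several degrees would point at the readout rather than the resistor.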

-

OK, everyone, thanks for the input - it was an interesting discussion.

I still feel strongly that to enter thermistor performance specifications based on 25°C operation is a bad idea when you want to control temperatures at 200°C and above. This was not meant to be a discussion about thermistors versus RTDs but how to get better performance when using thermistors.

My control board doesn't support the direct connection of an RTD so I would need to spend ~$20 on an amplifier/SPI interface, another ~$20 on the PT100 or PT1000 and then I would have to make modifications to my control board (like remove serial resistor). It's just not worth the cost or effort (or risk)! Even then, I would still need to calibrate it. If it was easy to connect an RTD, I wouldn't have started this posting.

The point on the nozzle temperature being different from the thermistor temperature is well taken which is why I'm calibrating temperature based on measurements from inside the nozzle itself.

Guess, I'll make my own arrangements to calibrate my system and it looks like there's no interest in me sharing these results or solutions.

Good to know.

Thanks again.

-

@wombat37 said in Hot-End Thermistor Temperature calibration:

Guess, I'll make my own arrangements to calibrate my system and it looks like there's no interest in me sharing these results or solutions.

I, for one, would very much like to hear about what you find.

Frederick

-

@wombat37 said in Hot-End Thermistor Temperature calibration:

it looks like there's no interest in me sharing these results or solutions.

Not at all. Please consider the initial reactions here as simply a part of peer review. You've been given the alternatives to compare with in price performance. Making a thermistor better is a great idea since they are so widely used. If you can come up with a way to calibrate them that is easy, repeatable, and cheap, that's very worthwhile.

-

@wombat37 I do have a suggestion for thermistor calibration, near the 200C working temperature. If you build a little offline metal block, with a heater, your thermistor, and a little extra well in it, and insulate it thoroughly so that the only exposed bit is the well, so you can see into it, you could use 63/37 eutectic solder as a melting point standard. You would just slowly step the temperature up a bit at a time, with a piece of the solder sitting in the bottom of the well. The solder will melt at 183C, so you can calibrate your thermistor to get that right.

Basically, you would set alpha, the base resistance of the thermistor, to get room temperature right, and then adjust beta to get the 183C point right. Then, move the thermistor back to your printer, and it should be pretty close to right over the entire usage range. This method will make it best at room temp, and near 183C, with somewhat larger errors in between those two points, and at higher temps, but it's probably a lot better than just using the book value of beta.
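If the thermistor's resistance can be read at both points (an assumption: for example with a multimeter, or back-calculated from the raw reading), the two-point fit has a closed form, so no trial-and-error adjustment is needed. This is only a sketch under the simple Beta model; the function name is made up.

```python
import math


def two_point_beta(t1_c, r1, t2_c, r2):
    """Fit the Beta model exactly through two (temperature, resistance) points.

    Returns (r25, beta): the equivalent resistance at 25 C and the beta value.
    """
    t1 = t1_c + 273.15
    t2 = t2_c + 273.15
    beta = math.log(r1 / r2) / (1.0 / t1 - 1.0 / t2)
    # Slide along the fitted curve from point 1 to the 25 C reference.
    r25 = r1 * math.exp(beta * (1.0 / 298.15 - 1.0 / t1))
    return r25, beta


if __name__ == "__main__":
    # Placeholder resistances for illustration only (not measurements):
    # a reading at 22 C room temperature and one at the 183 C solder melt.
    r25, beta = two_point_beta(22.0, 115_000.0, 183.0, 900.0)
    print(f"R25 = {r25:.0f} ohm, beta = {beta:.0f}")
```

As the post says, the resulting curve is exact at the two calibration points and drifts somewhat in between and above them.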

-

I used pure metallic tin last time - which worked well (m.p. 231.9°C). I can also look at the boiling points of silicone oil or something like dodecane. Solder probably won't be very pure and as an alloy, its composition may vary. Also, it may not have a sharp melting point like a pure substance. However, we're not doing purity analysis (melting points can be used as a measure of purity) here so a few degrees error probably won't hurt.

Looks like there is some interest in this topic, so I will keep looking into this. I'll post up an algorithm over the next few days and see what people think of that and get some more ideas.

-

@wombat37 I think if you buy the real 63/37 eutectic, it is fairly tightly controlled since it is a mil spec. Also, since the temperature is at a minimum there, it is flat over a moderate range of compositions (quadratics are nice). If you have pure tin, though, that is fine, too. It's just a bit less available than 63/37. If you want to get fancy, do tin and solder, and then you can fit the cubic coefficient, too!

Boiling points are harder, since a lot of things superheat before they boil.

-

I didn't realize that these solders were eutectic. Most alloys typically melt over a range of temperature.

Regarding tin, it's not too expensive to get reasonable purity - perhaps less than the solder you recommend:

I agree about boiling points being a bit erratic because of superheating. However, many things supercool so care has to be taken to determine melting points as well.

I feel it would be enough to use just one calibration point (in addition to ice or steam) for the digital thermometer for a narrow range of operating temperatures - say, something in the 180 to 260°C range. I don't really care if the temperature below that is accurate - it's never used except to tell me that the hot-end is now cold enough to touch. There's an easy way to find that out anyway. We can still do the cubic coefficient determination by taking more measurements with the thermocouple probe. Solving 3rd-order equations may be a bit challenging for RRF macros, though.

-

@wombat37 Yes, on your comment about not needing extra calibration points. Extras would be just for fun. The working temperature range is fairly narrow, and pure tin is close enough to the middle of it to give really good results. Supercooling is, of course, why one uses either melting points (with slow upward stepping), or equilibrium freezing points, where you keep the melt partly melted and partly crystalline. That method is usually considered to be the gold standard, either via the water triple point at 0.01C, the gallium freezing point at 29.7646C, or various higher-temperature metals. These are used as reference points on the official ITS-90 temperature scale.

I think the thermistor calibration built in accepts the cubic Steinhart-Hart coefficient already, so it is no extra work to put it in, if you want. You have to do the solution for the coefficients on your computer, not in RRF.
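A minimal sketch of that offline solve: with three (temperature, resistance) points, for example room temperature, the solder melt and the tin melt, the Steinhart-Hart equation 1/T = A + B·ln(R) + C·ln(R)³ can be solved exactly for A, B and C. The function names are illustrative, and the resistances are assumed to be measured separately.

```python
import math


def steinhart_hart_fit(points):
    """Solve 1/T = A + B*ln(R) + C*ln(R)**3 exactly through three points.

    points: three (temp_c, resistance_ohm) pairs with distinct resistances.
    Returns (a, b, c).
    """
    (t1, r1), (t2, r2), (t3, r3) = points
    l1, l2, l3 = math.log(r1), math.log(r2), math.log(r3)
    y1, y2, y3 = (1.0 / (t + 273.15) for t in (t1, t2, t3))
    # Divided differences eliminate A, then B, leaving C.
    g2 = (y2 - y1) / (l2 - l1)
    g3 = (y3 - y1) / (l3 - l1)
    c = (g3 - g2) / (l3 - l2) / (l1 + l2 + l3)
    b = g2 - c * (l1 * l1 + l1 * l2 + l2 * l2)
    a = y1 - (b + c * l1 * l1) * l1
    return a, b, c


def steinhart_hart_temp(r, a, b, c):
    """Temperature in C predicted by the Steinhart-Hart equation for resistance r."""
    ln_r = math.log(r)
    return 1.0 / (a + b * ln_r + c * ln_r ** 3) - 273.15
```

Firmware that takes a beta value plus a C term may parametrize the curve differently from raw A, B, C, so check its documentation before entering the numbers.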

-

@mendenmh I had a great idea that came to me overnight and maybe this is what you had in mind all along. Solder and tin can come in reels of wire. This wire could be pushed directly into the hot end. If the temperature of the hot end was (very) slowly increased, there would come a point at which the metal would melt and the wire could be extruded. This would be at the melting point of the wire and so the temperature of the hot-end would now be known - at the exact location it's needed. Hopefully because the alloy is eutectic this measurement will be very precise and repeatable. I'm also thinking that, unlike filament, the molten metal will have low viscosity and will quickly drip out of the nozzle.

I've been hung up on how to calibrate and use thermocouple probes etc. to make temperature measurements but this extrusion approach would eliminate all that crap and provide a very direct, cheap and simple means of performing a single point temperature calibration without needing any additional equipment and would work for any type of temperature sensor on any printer.

Of course, all depends on the details.

I'm thinking of something along the lines of:

- Cut a short length of solder wire of suitable diameter - say 100mm x 1.5mm

- Heat up the hot end

- Remove any filament from the hot-end and perhaps fit a new nozzle

- Set the hot-end temperature to about 20°C below the melting point of the solder

- Push one end of the solder wire into the hot-end through the heat-break

- Attach a weight to the other end to exert a sustained force on the wire

- Run a macro to increase the hot-end temperature at a slow rate - say 1°C/min

- Look for molten solder emerging from the nozzle and note the temperature reading when it does.

- Use the read temperature and the known melting point of the solder to make a correction to the hot-end temperature calibration

- Catch the liquid metal in a suitable dish or tray

- If we could find other solder/metals/alloys that melt at different temperatures we could as you say, determine the cubic coefficient, C, for a thermistor

- If we wanted to automate this, we could locate an electrode immediately beneath the nozzle to detect the presence of molten metal at that point and feed that event back into the running macro.
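The correction step in the list above could be sketched as follows. This assumes the firmware converts resistance with the simple Beta model (C = 0) and that only Beta is adjusted while the 25°C resistance is left alone; under those assumptions the base resistance cancels out, so only the displayed temperature at the moment of melting and the currently configured Beta are needed. The function names are hypothetical.

```python
T0_K = 298.15  # 25 C reference temperature in kelvin


def corrected_beta(reported_c, true_c, beta_old):
    """New beta that makes the reading at the melt point equal true_c.

    reported_c: temperature displayed when molten metal first appeared.
    true_c:     known melting point (e.g. 183 for 63/37 solder, 231.9 for tin).
    beta_old:   beta currently configured in the firmware.
    """
    # ln(R / R25) implied by the firmware's own reading.
    ln_ratio = beta_old * (1.0 / (reported_c + 273.15) - 1.0 / T0_K)
    # Choose beta so that the same resistance maps to the true temperature.
    return ln_ratio / (1.0 / (true_c + 273.15) - 1.0 / T0_K)


def reading_with_beta(reported_c, beta_old, beta_new):
    """What the display would have shown at that instant with beta_new configured."""
    ln_ratio = beta_old * (1.0 / (reported_c + 273.15) - 1.0 / T0_K)
    return 1.0 / (1.0 / T0_K + ln_ratio / beta_new) - 273.15


if __name__ == "__main__":
    # Illustrative numbers only: the display showed 190 C when the solder melted.
    new_beta = corrected_beta(190.0, 183.0, 3950.0)
    print(f"new beta: {new_beta:.0f}")
```

A single point pins the curve only near the melting temperature, which matches the earlier remark that accuracy well below the working range is not a concern.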

Some questions:

- Will the rosin in the solder affect the result?

- Will the solder corrode/dissolve the metal in the nozzle and affect the result and/or destroy the nozzle? What type of nozzle would be best? Brass, copper, steel, stainless steel, titanium - they all seem to be available.

- Will solder be left in the nozzle after the test making it useless for later work with filament? Could it be removed by a wash of filament? Perhaps we would need a dedicated nozzle for these temperature tests.

- What diameter nozzle orifice would be best? 1mm?

I may try out some quick tests as a sort of proof of concept exercise. But this looks like a good way to go and thank you for your input that led to this.

-

@mendenmh Thank you for your detailed explanation...it exceeds by far my humble knowledge

I have just received a PT1000 (2 wire) from trianglelabs, that I intend to use as a direct replacement of my current HT-NTC100K B3950 thermistor. Once correctly configured in config.g, I hope not to have to battle again with B and C thermistor coefficients. Regarding the calibration resistor, I currently have a Duet 2 Wifi installed (although I keep a Duet 3 Mini5+ in reserve). I have not exchanged the former for the latter because it seems there is not much to gain from the change.

The PT1000 arrived with three 1K 0.1% SMD resistors to replace the default 4.7K pullup on the Duet2, but unless I am convinced that it is absolutely mandatory I would prefer not to try soldering on the board, especially as I don't have SMD soldering experience.

I have heard somewhere on this forum that even on a stock Duet 2 Wifi the reduced resolution is more than enough for FDM printer purposes. If not, I could consider to replace the board with the Duet 3 Mini since its pullup resistor happens to be a more adequate 2.2 K.
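A back-of-envelope way to compare pull-up values is to work out how many ADC counts one degree is worth. The sketch below assumes a plain voltage divider between the pull-up and the PT1000 and a 12-bit converter (4096 counts); both are assumptions for illustration, and firmware averaging or oversampling would make the effective resolution finer than this raw figure.

```python
# IEC 60751 coefficients for standard platinum RTDs, valid for T >= 0 C
A = 3.9083e-3
B = -5.775e-7


def counts_per_degree(temp_c, pullup_ohm, adc_counts=4096, r0=1000.0):
    """Raw ADC counts per degree C for an RTD read through a pull-up divider."""
    r = r0 * (1.0 + A * temp_c + B * temp_c ** 2)     # sensor resistance
    dr_dt = r0 * (A + 2.0 * B * temp_c)               # ohms per degree
    dfrac_dr = pullup_ohm / (r + pullup_ohm) ** 2     # d/dR of R / (R + Rp)
    return adc_counts * dfrac_dr * dr_dt


if __name__ == "__main__":
    for rp in (4700.0, 2200.0, 1000.0):
        cpd = counts_per_degree(200.0, rp)
        print(f"pull-up {rp:6.0f} ohm: {cpd:.2f} counts/C, {1.0 / cpd:.2f} C/count")
```

The divider is most sensitive when the pull-up is close to the sensor's own resistance at the working temperature, which is why the lower-value pull-ups look better than 4.7K for a PT1000 in this simplified model.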

I don't have any 2K calibration resistor at hand, I would have to order it on RScomponents or similar and for the use I am going to give it I would prefer to try to go without it.

-

@ignacmc I did not replace the resistors on my Duet2 v1.02, either. However, I did note that there was a significant (few degrees at room temp) error on ADC channel 0, but not on channel 1. I swapped the choice of channels, and everything works very well. This is probably an effect known as charge injection, which affects scanning ADCs. If the previous voltage read was very different from the next, some of the reading will bleed over due to stored charge on the sampling capacitor. Reducing the feedback resistor probably helps this. Apparently the differential between channels when I moved the PT1000 to channel 1 was small enough that it was fine. This would mostly show up as a fixed offset, anyways. I really like having a temperature sensor that I can both trust over the whole range, and interchange, if needed, without recalibrating.

-

@mendenmh I am going to directly install the PT1000 and configure it. Then I will cross my fingers expecting not to have the same problem as you

Thanks for your advice and your expertise in this kind of sensors. As a Mechanical Engineer I am stepping on foreign ground, haha!

-

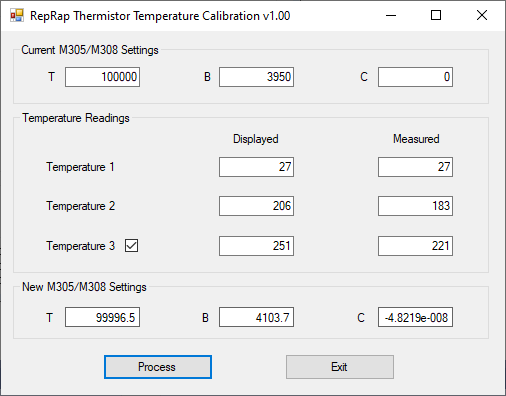

@wombat37 Following on from my last posting, I have now completed my tests and believe that I now have a quick, easy, cheap, reliable and accurate method of calibrating hot end thermistor temperature control (with all three Steinhart-Hart coefficients) that should work with any printer without any disassembly or modification or even the need for a thermometer.

With a cheap unbranded thermistor I believe that I can achieve accuracy within 1°C across the full working range.

I'm currently in the process of writing up a white-paper on this. If anyone is interested in beta-testing this method, let me know.

-

I’m interested

only worked out the first 2 of the 3 using this method

https://forum.duet3d.com/post/191523