[3.6.0-beta.3] DWC connection issues Duet2 / Duex5

-

@chrishamm @droftarts

it was to early - it will crash even with only 2 DWC connections opened. I also tried to poll rr_reply ever second but even that is not enough. If you have bad luck and two DWC sessions open, it will crash on the first or second try.EDIT:

same for

M409 K"" F"d2" -

@timschneider Thanks for the report. I've logged this at https://github.com/Duet3D/RepRapFirmware/issues/1073

-

@droftarts

@chrishamm thank you for open up a bug report for it, but i'm almost certain that this is just one bug out of at least two.somehow the rr_reply can add up and even a quit short and simple output can crash the webserver / frontend.

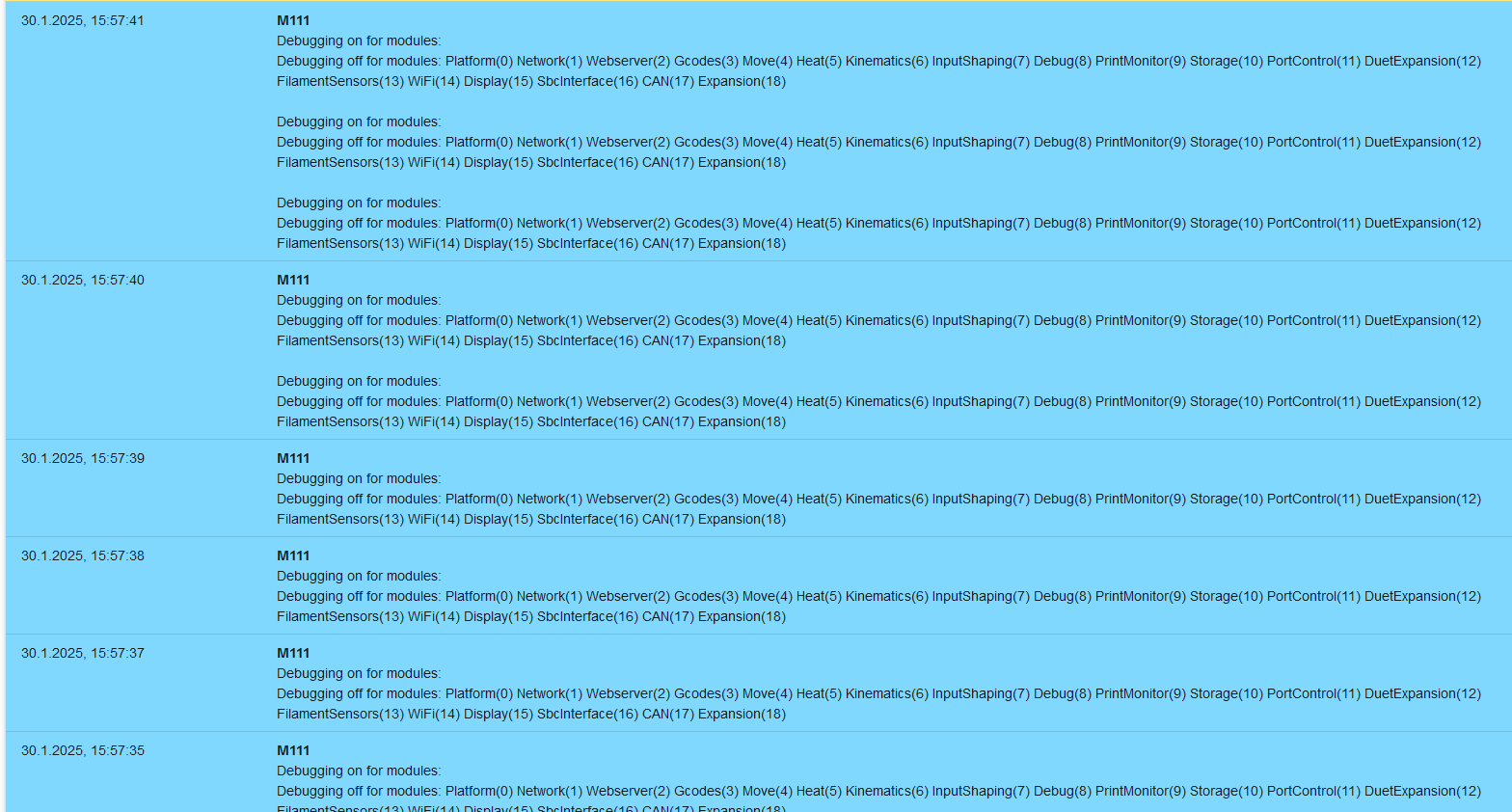

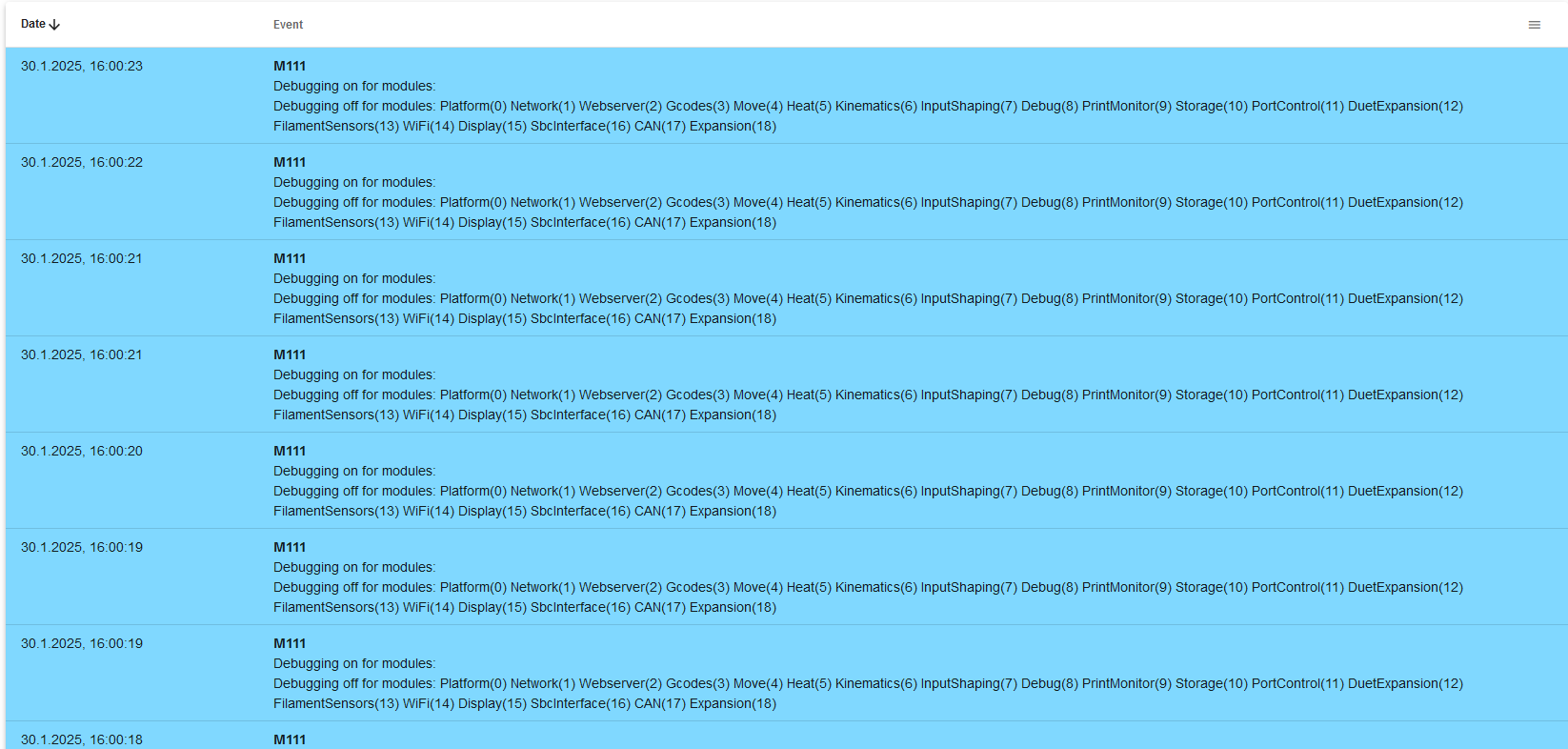

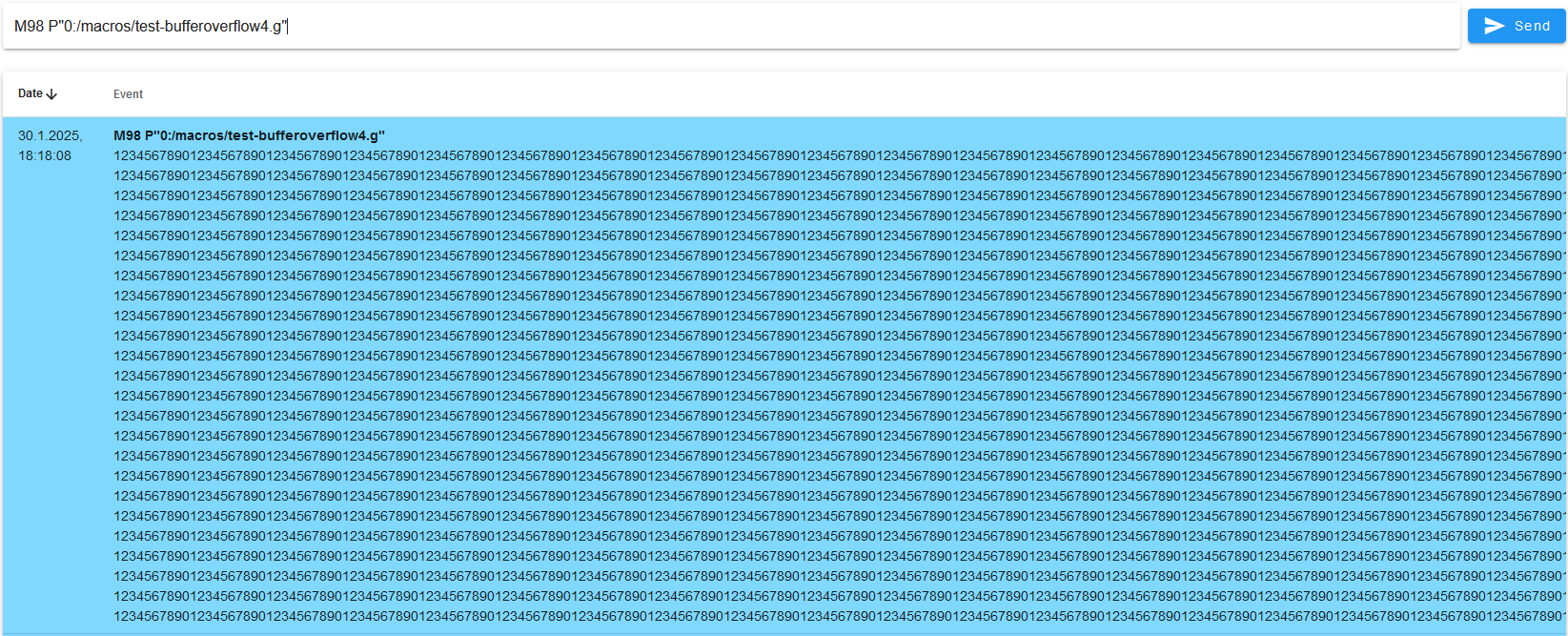

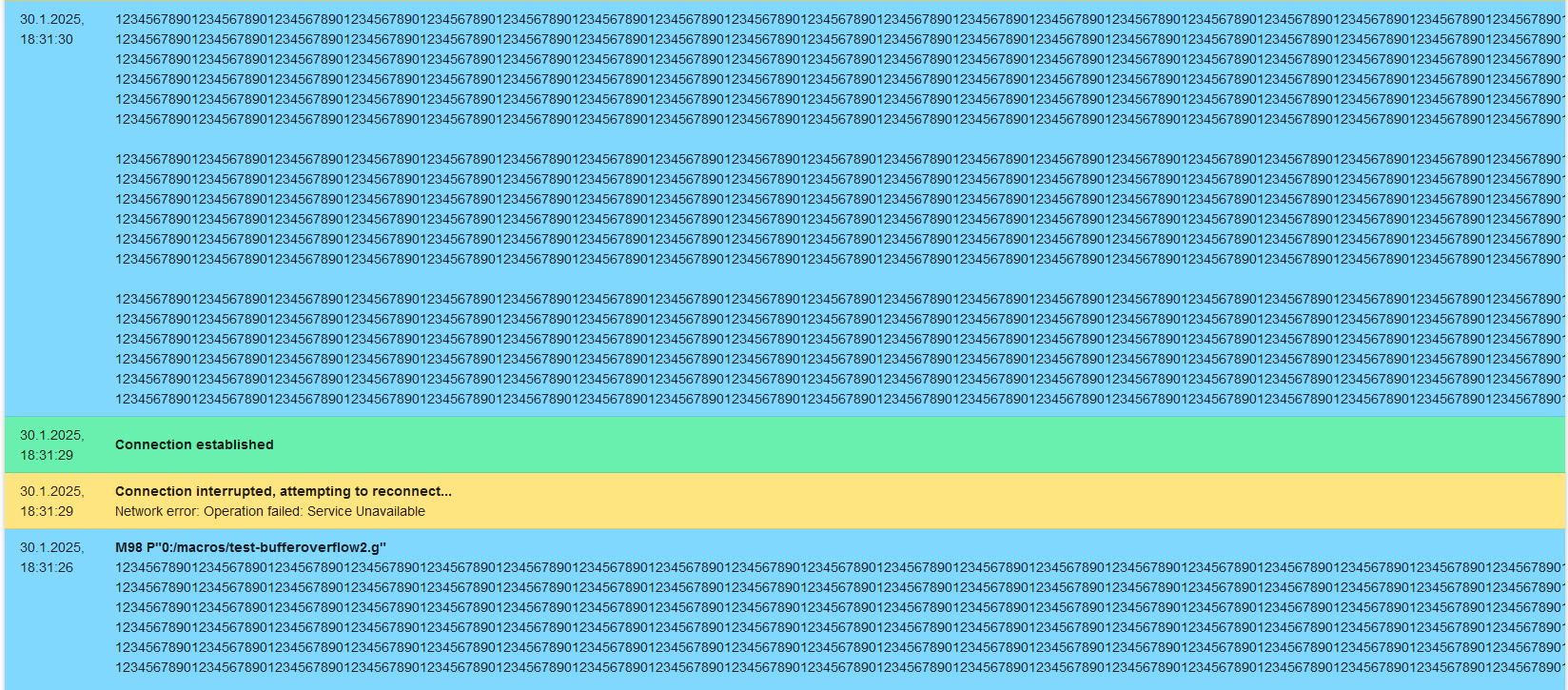

the following output shows the output in the console when at least two clients are connected, in this case two DWC sessions

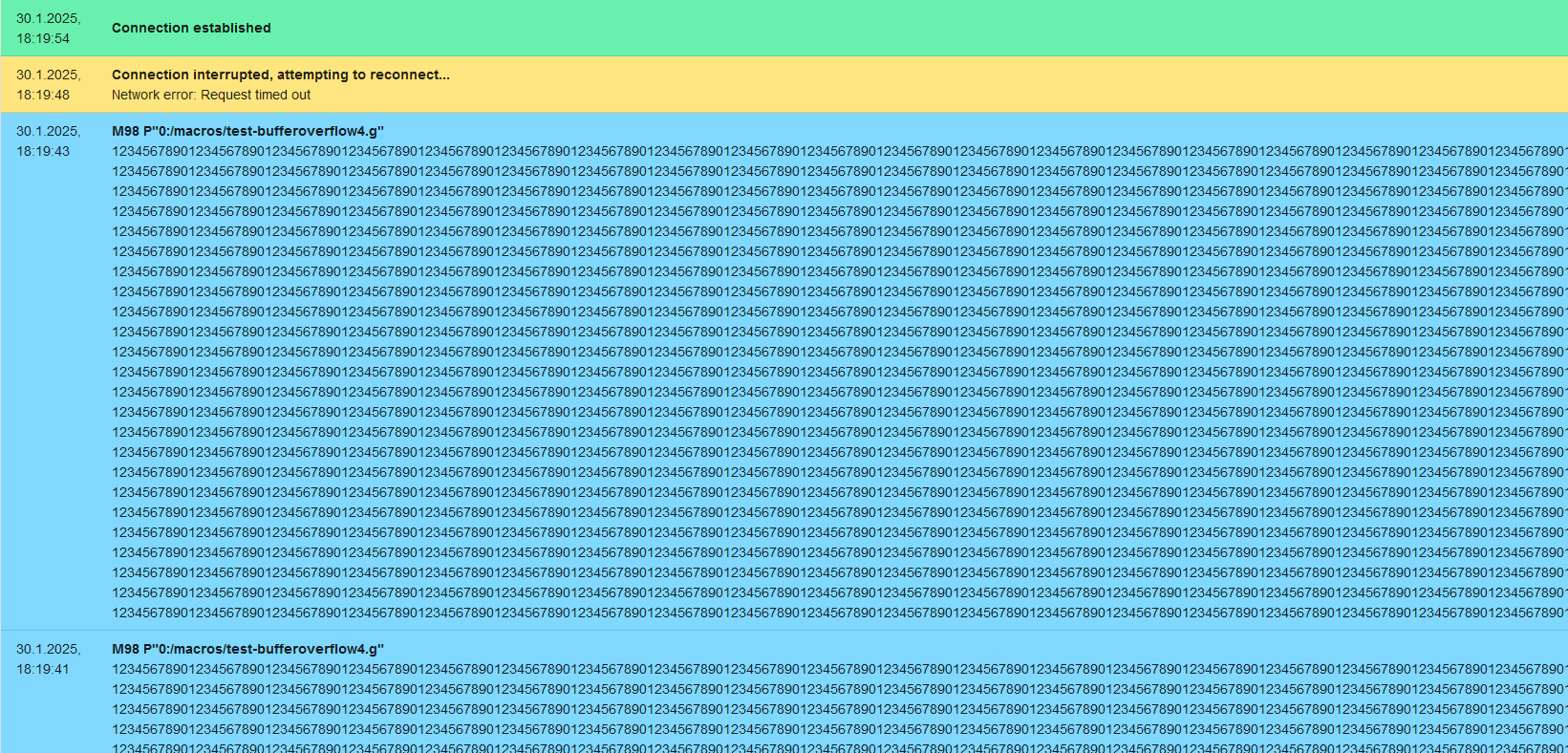

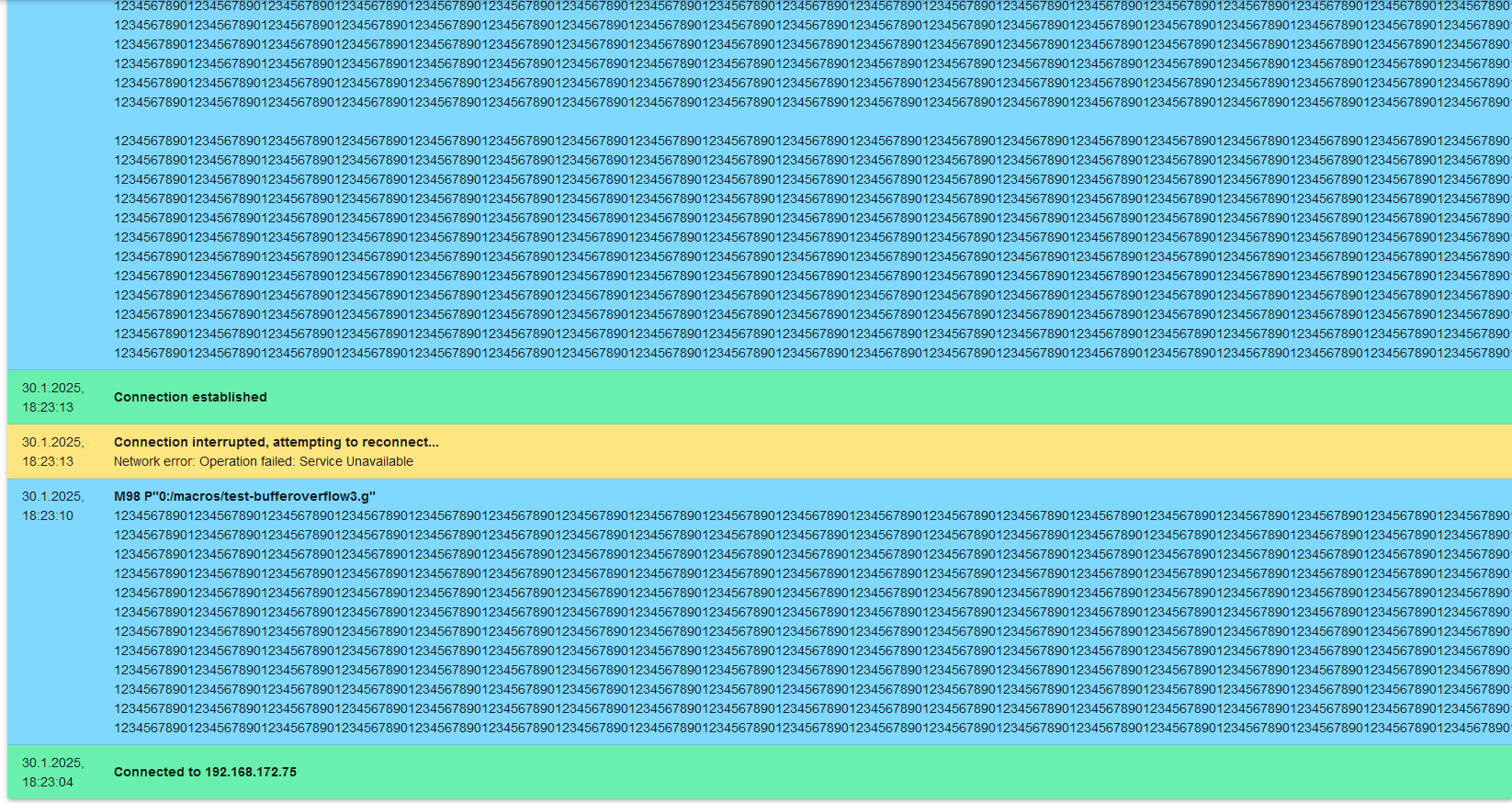

after adding up, it will kill all open sessions

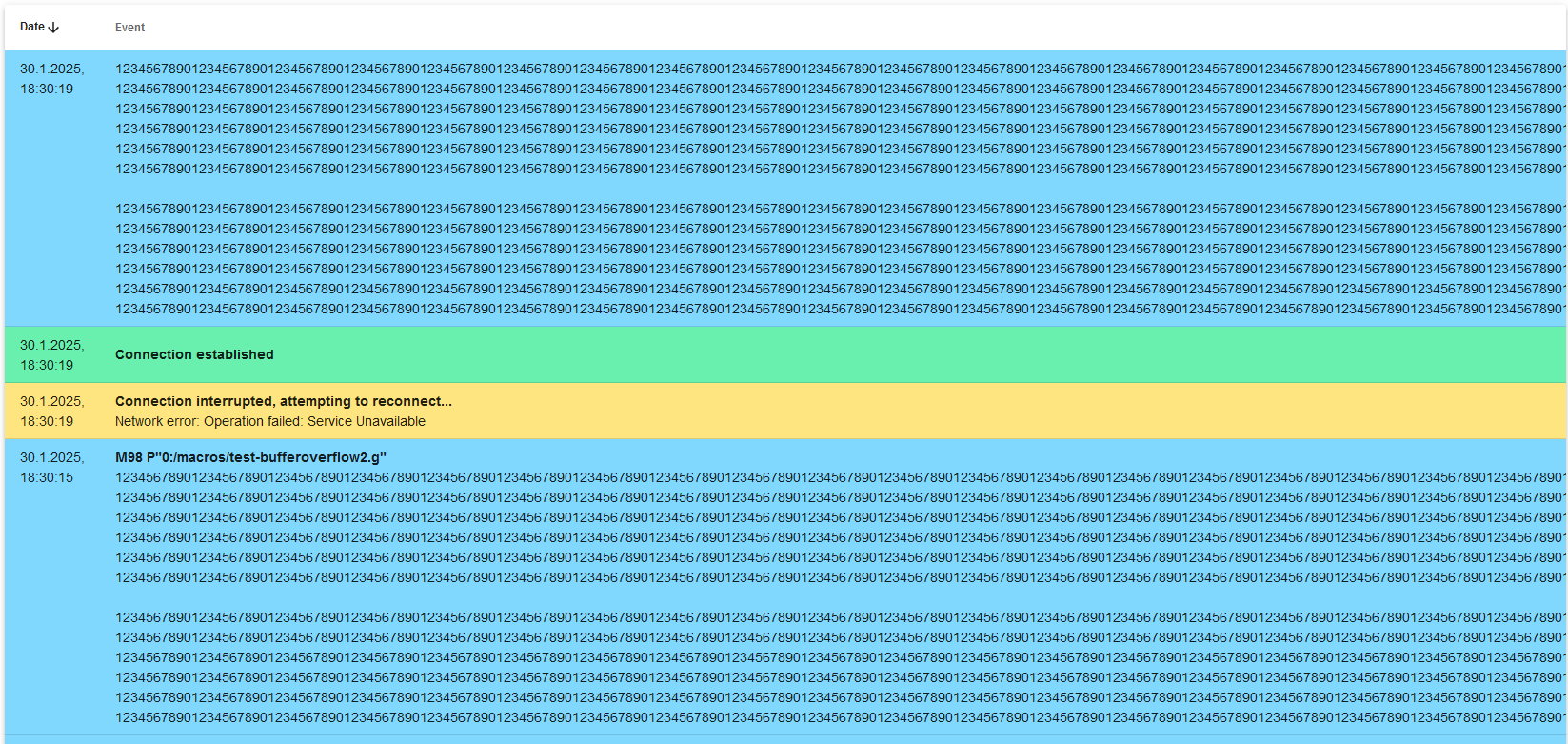

if only one DWC session is open the output looks like the following and it is impossible (via DWC) to add up the output

We use a reverse proxy to connect to duet and I observed the above mentioned behavior in the past, but alwas thought it is related to the reverse proxy and was not paying attention to it. The above shown screenshots are NOT connected to the reverse proxy - I was directly connected to the duet.

EDIT: I've checked if i'm able to add up the output in DSF setup or crash the frontend - and it was not possible.

-

@timschneider DSF has a lot more memory available so it's very unlikely to get it to crash. If long G-code replies are not released quickly enough in standalone mode, that can lead to output buffer starvation which makes the whole webserver unresponsive (for a few seconds at least). That's why I proposed to call

rr_replyrelatively frequently from "bot" clients. The other issue which had to do with failed SPI transfers is being investigated. -

@chrishamm This explanation does not seem to explain the behaviour shown above.

In the above example there are only two DWC sessions open and the overall output is just about 1100 characters.

I did another test with the following macros:

M98 P"0:/macros/test-bufferoverflow.g"

echo "123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890" echo "123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890" echo "123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890"M98 P"0:/macros/test-bufferoverflow2.g" -> two times the content of test-bufferoverflow.g (6 times echo with 210 chars)

M98 P"0:/macros/test-bufferoverflow3.g" -> two times the content of test-bufferoverflow2.g (12 times echo with 210 chars)

M98 P"0:/macros/test-bufferoverflow4.g" -> two times the content of test-bufferoverflow3.g (24 times echo with 210 chars)Executing macro M98 P"0:/macros/test-bufferoverflow4.g" will work

Executing two times macro M98 P"0:/macros/test-bufferoverflow4.g" will fail

Executing two times macro M98 P"0:/macros/test-bufferoverflow3.g" will fail

Executing two times macro M98 P"0:/macros/test-bufferoverflow2.g" will sometimes fail

Executing three times macro M98 P"0:/macros/test-bufferoverflow2.g" will fail

so it looks like it is realted to the memory, but it is no "out of memory" due to the size of the output.

with only one DWC session i'm able to get it crashing with two times M98 P"0:/macros/test-bufferoverflow4.g" - but not with the other macros.

The problem from my side is, that when two clients are connected and issue a G-Code with response, this sometimes crashes the webserver and both DWC connections are reconnecting. From theorie it should work as long as there are output buffers, but somehow it appears they get wasted somewhere.

Output of M122

30.1.2025, 18:36:37 M122 === Diagnostics === RepRapFirmware for Duet 2 WiFi/Ethernet version 3.6.0-beta.3 (2025-01-16 19:09:12) running on Duet WiFi 1.02 or later + DueX5v0.11 Board ID: 08DJM-9178L-L4MSJ-6J1FL-3S86J-TB2LN Used output buffers: 2 of 26 (26 max) === RTOS === Static ram: 23520 Dynamic ram: 68716 of which 220 recycled Never used RAM 27540, free system stack 182 words Tasks: NETWORK(1,ready,16.6%,195) HEAT(3,nWait 5,0.1%,283) Move(4,nWait 5,0.0%,361) DUEX(5,nWait 5,0.0%,23) MAIN(1,running,82.8%,712) IDLE(0,ready,0.5%,29), total 100.0% Owned mutexes: === Platform === Last reset 30:48:46 ago, cause: power up Last software reset at 2025-01-27 15:25, reason: User, Gcodes spinning, available RAM 28108, slot 2 Software reset code 0x0003 HFSR 0x00000000 CFSR 0x00000000 ICSR 0x00400000 BFAR 0xe000ed38 SP 0x00000000 Task MAIN Freestk 0 n/a Error status: 0x0c MCU temperature: min 28.7, current 29.2, max 30.3 Supply voltage: min 23.8, current 24.0, max 24.3, under voltage events: 0, over voltage events: 0, power good: yes Heap OK, handles allocated/used 99/7, heap memory allocated/used/recyclable 2048/1484/1348, gc cycles 5713 Events: 0 queued, 0 completed Date/time: 2025-01-30 18:36:30 Slowest loop: 17.84ms; fastest: 0.18ms I2C nak errors 0, send timeouts 0, receive timeouts 0, finishTimeouts 0, resets 0 === Storage === Free file entries: 8 SD card 0 detected, interface speed: 20.0MBytes/sec SD card longest read time 8.5ms, write time 0.0ms, max retries 0 === Move === Segments created 0, maxWait 0ms, bed comp in use: none, height map offset 0.000, hiccups added 0/0 (0.00ms), max steps late 0, ebfmin 0.00, ebfmax 0.00 Pos req/act/dcf: 0.00/0/0.00 0.00/0/0.00 0.00/0/0.00 no step interrupt scheduled Driver 0: standstill, SG min n/a Driver 1: standstill, SG min n/a Driver 2: standstill, SG min n/a Driver 3: standstill, SG min n/a Driver 4: standstill, SG min n/a Driver 5: standstill, SG min n/a Driver 6: standstill, SG min n/a Driver 7: standstill, SG min n/a Driver 8: standstill, SG min n/a Driver 9: standstill, SG min n/a Driver 10: Driver 11: === DDARing 0 === Scheduled moves 0, completed 0, LaErrors 0, Underruns [0, 0, 0] Segments left 0 Code queue is empty === Heat === Bed heaters 0 -1 -1 -1, chamber heaters -1 -1 -1 -1, ordering errs 0 === GCodes === Movement locks held by null HTTP is idle in state(s) 0 Telnet is idle in state(s) 0 File is idle in state(s) 0 USB is idle in state(s) 0 Aux is idle in state(s) 0 Trigger is idle in state(s) 0 Queue is idle in state(s) 0 LCD is idle in state(s) 0 Daemon is doing "G4 P100" in state(s) 0 0, running macro Autopause is idle in state(s) 0 === Filament sensors === check 0 clear 399166717 Extruder 0: pos 2160.00, errs: frame 0 parity 0 ovrun 0 pol 0 ovdue 0 === DueX === Read count 0, 0.00 reads/min === Network === Slowest loop: 21.90ms; fastest: 0.07ms Responder states: HTTP(0) HTTP(0) HTTP(0) FTP(0) Telnet(0) HTTP sessions: 1 of 8 === WiFi === Interface state: active Module is connected to access point Failed messages: pending 0, notrdy 0, noresp 0 Firmware version 2.2.1 MAC address bc:dd:c2:31:9a:40 Module reset reason: Turned on by main processor, Vcc 3.46, flash size 4194304, free heap 42496 WiFi IP address 10.42.0.2 Signal strength -21dBm, channel 11, mode 802.11n, reconnections 0 Clock register 00002002 Socket states: 0 0 0 0 0 0 0 0 -

@timschneider if you enable all Move debugging in recent versions of RRF then huge amounts of debug output are generated, which means all bets are off. M111 should only be used to enable debugging selectively, using the P and D parameters so select the modules and subsystems to debug.

Duet 2 is somewhat memory constrained so it has less output buffer memory available than Duet 3 boards. That said, it does appear from the output you have posted that there may be a particular issue with the interface to the WiFi module when there is a lot of data to output. This is on my list to investigate.

-

@dc42 @chrishamm

thank you both for your time and patience.

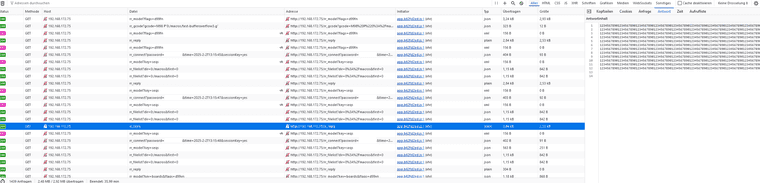

I moved forward with the knowledge of the low memory issue and reprogrammed the python client to be a fully fledged digital twin of the duet printer as per object model. In order to archive this I followed the description of M409 and implemented theaandnexthandling aswell as theseqshandling. So what I do now is, poll the rr_model endpoint with key=seqsto keep track of the sequence numbers and keep the load on the duet as low as possible. If a change happens, it will act accordingly, e.g. fetch a reply or partially fetch the updated object model recursive with max depth oflevel + 1, again to keep the load as low as possible.but even that didn't solved the problem - it appears in some cases to make it even worse (i guess two output buffers per json response)

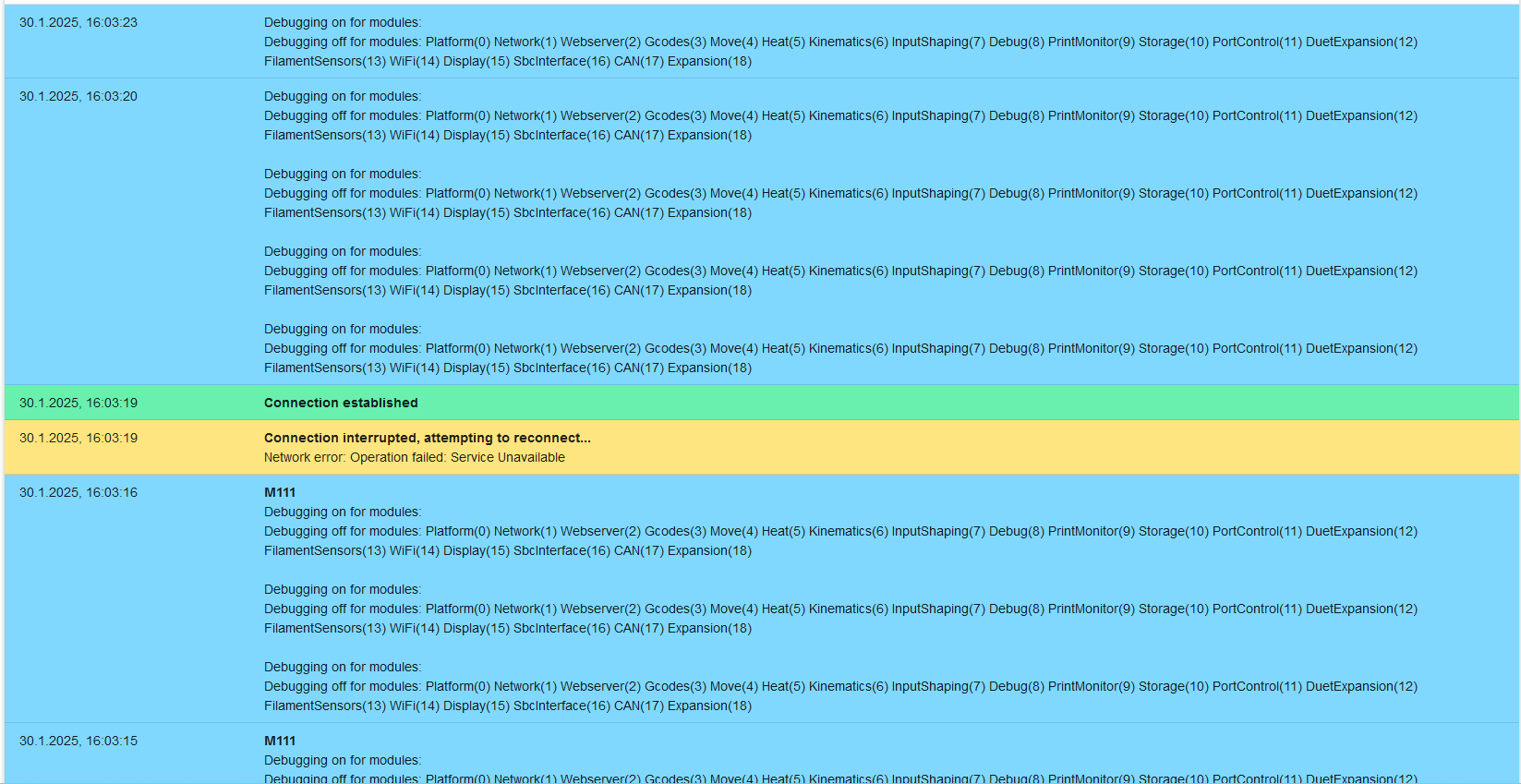

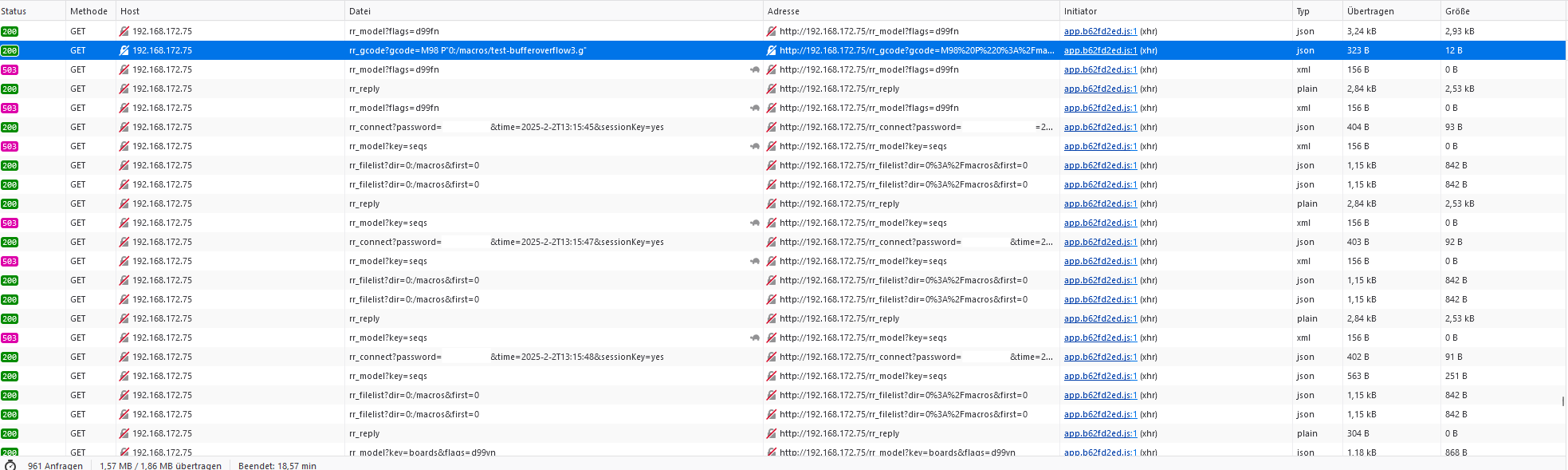

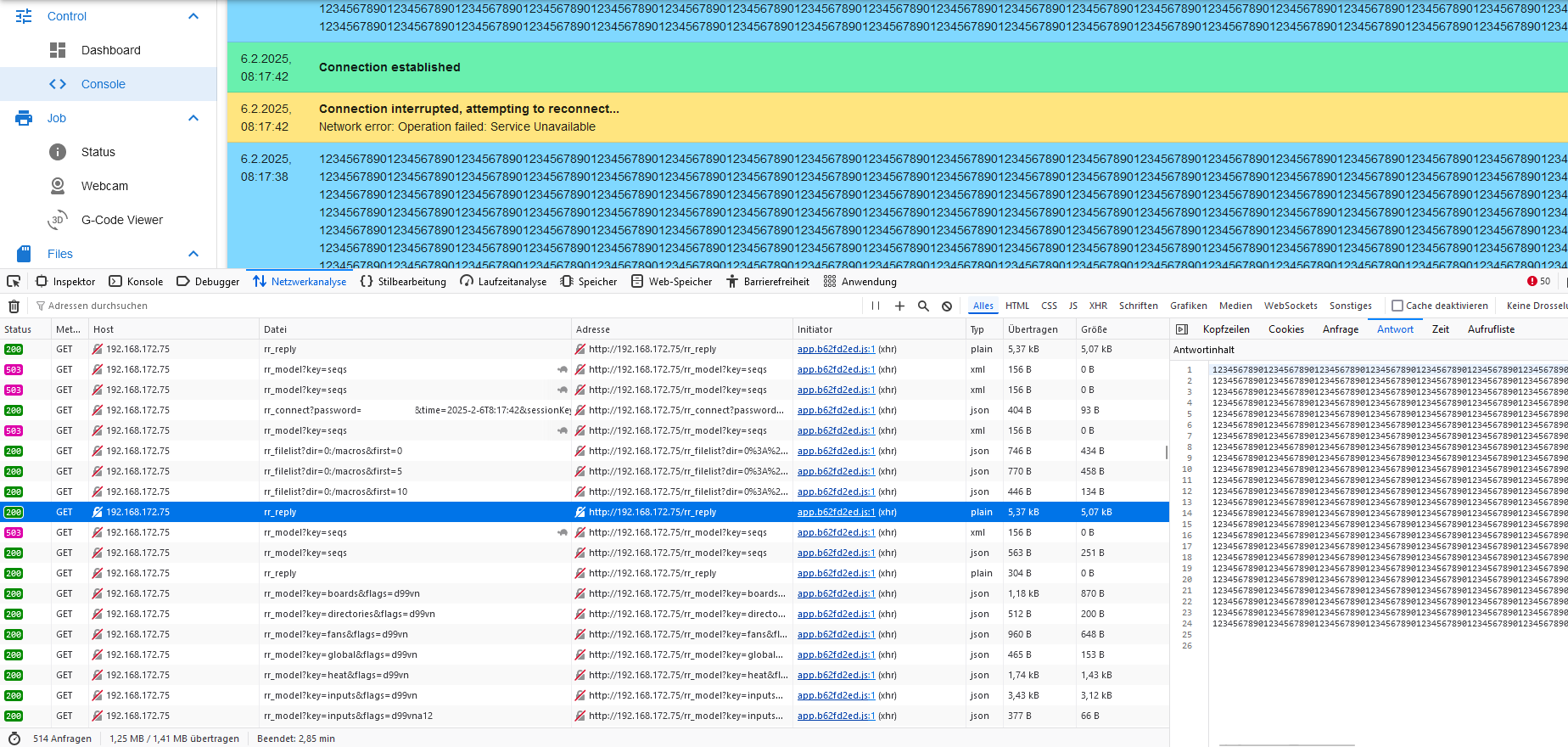

In the following there is one DWC Client and one python client connected.

Anyhow I wanted to understand the problem and at least find a workaround, but I was not able to.

What I found was that on an error - DWC is doing a reconnect straight, which makes it even worse, as this will increase the number of connected clients and will leave gcode replies unreceived and the buffer allocated, I guess?

Using the same macro as above. Directly after sending the command, the following rr_model request in DWC will fail - python client will also receive a HTTP 503 busy and will backoff 5 sec. before even trying to do anything (leaving the session open / timeout=8s - and leaving the reply probably not fetched for this session / due to the 503 busy response). DWC will open a new session and the internal connected clients count is increased, still keeping the buffer allocated. DWC tries to fetch a rr_model and will fail again with HTTP 503 - again reconnect / client count +1 / buffer allocated and so on ...

The last rr_reply in the timeline is empty - and the reconnect and following rr_model request are successfull.

Maybe it is possible to change the behaviour of the DWC reconnect mechanism and check if the HTTP response is 503 - wait and do not reconnect and on the duet side if multiple json requests fail - drop pending gcode replies? Or as an alternative always serve the rr_model seqs requests to give a client a chance to consume rr_reply to free up output buffers. Just randomly polling the rr_reply endpoint will steal the response from DWC, so thats also not an option.

-

@timschneider

wow that did the trick, when the python client receives an 503 it will just query the rr_reply immediately until the response is empty to free up buffers on the duet - DWC will not reconnect and everything works even with

even with M98 P"0:/macros/test-bufferoverflow3.g", but DWC will sometimes not receive the output of that macro as the python client consumed both replies.It will even survive

M98 P"0:/macros/test-bufferoverflow4.g"Using this method having 6 concurrent DWC connections open and one python client is no problem anymore - it will even work with the

M98 P"0:/macros/test-bufferoverflow4.g"Edit: there is still some problem in data and memory management, sometimes i do receive partial data

Response payload is not completed: <ContentLengthError: 400, message='Not enough data for satisfy content length header.'>due to connection closing mid transmitting from duet to the reverse proxy. -

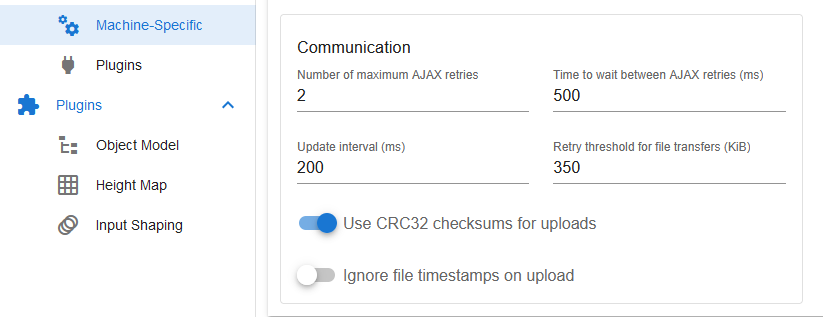

@timschneider What do you have "AJAX retries" in the DWC settings set to?

-

-

@chrishamm

Damn! This really looks promising!https://github.com/Duet3D/RepRapFirmware/commit/a3498d31aa746d0486e5a109c091f15a8b8e41c0

This could explain all the issues we and our customers have been facing – what could be described as DWC instability.

We use object labels in every print, most of our customers have a large file list, most print jobs take longer than a day, often 3-5 days. I am really excited and looking forward to trying this out! -

@timschneider Please give it a try then. Do you need a firmware binary for testing?

-

@chrishamm if you have one at hand, that would be nice. If not, I can compile my own withing the next days.

-

@chrishamm

Hi Chris,Thank you for your prompt reply and the in-depth solution. The fix does indeed help alleviate the ‘M122 M122 M122’ issue. Calling ‘M122 M122 M122 M122 M122 M122 M122’ sometimes still causes the web server to crash (will not recover), and calling ‘M98 P"0:/macros/test-bufferoverflow4.g"’ twice resets the connection. With 3 open sessions, all DWC.With only two open DWC sessions it can still recover the

M122 M122 M122 M122 M122 M122 M122. CallingM98 P"0:/macros/test-bufferoverflow4.g"will still reconnect DWC.

With only one session it will just reconnect with the M122 bomb and I'm unable to get it disconnected with theM98 P"0:/macros/test-bufferoverflow4.g".So I think part of the problem is fixed and it feels like 80% fixed – 20% left and it is somehow related to multiple client sessions.

I will be in the office tomorrow to get some usb logs.

It is maybe worth to consider if RRF is low on OutputBuffers not to concat the gcode replys of multiple requests but instead drop the previos reply.

Btw. with my special python client connected (calling rr_reply as fast as possible when receiving the first HTTP 503) I am not able to get it crashed nor disconnected now.

Edit:

I stumbled over theMinimumBuffersForObjectModeland therr_modelrequest is checking if there are free buffers available, but what will happen the other way around? Like, query a rr_model and then from another socket a rr_gcode with large response in rr_reply?Tim

-

@timschneider said in [3.6.0-beta.3] DWC connection issues Duet2 / Duex5:

It is maybe worth to consider if RRF is low on OutputBuffers not to concat the gcode replys of multiple requests but instead drop the previos reply.

That's what happens. For the same reason the last M122 output is likely truncated if you send multiple M122s at once.

@timschneider said in [3.6.0-beta.3] DWC connection issues Duet2 / Duex5:

I stumbled over the MinimumBuffersForObjectModel and the rr_model request is checking if there are free buffers available, but what will happen the other way around? Like, query a rr_model and then from another socket a rr_gcode with large response in rr_reply?

rr_model waits half a second if it cannot allocate enough memory for the response and if it fails, HTTP 503 is returned. In that case a client should request the reply to free up the buffers again ASAP. I still think it would be good to add a new parameter to rr_connect to make RRF discard G-code replies for particular clients.

-

@chrishamm hm i don't know -

@chrishamm said in [3.6.0-beta.3] DWC connection issues Duet2 / Duex5:

rr_model waits half a second if it cannot allocate enough memory for the response and if it fails, HTTP 503 is returned. In that case a client should request the reply to free up the buffers again ASAP. I still think it would be good to add a new parameter to rr_connect to make RRF discard G-code replies for particular clients.

that would solve the problem with bot clients - but I was connected with two or three DWC sessions only.

Maybe add a timeout to rr_reply, after 500ms or 1s the reply will be discarded regardless of if it was fetched or not?! But anyhow maybe the problem is somewhere else, I was just speculating, because the problem now appears only with multiple sessions. -

@chrishamm said in [3.6.0-beta.3] DWC connection issues Duet2 / Duex5:

That's what happens. For the same reason the last M122 output is likely truncated if you send multiple M122s at once.

i tried to digg deeper into it and it looks like this is working even with multiple clients, but there is a behaviour I would not expect from RRF. It will serve the reply even after a reconnect / 3 timeouts (HTTP 503 on rr_model).

Maybe the firmware can drop pending gcode replies if it is short on memory and not able to serve multiple rr_model requests?

Maybe instead of dropping, turncat and leave a message why this happend. Like, "This is your response ... (turncated due to low memory)" that the pending reply will fit into exactly one output buffer.Or maybe concider setting

clientsServed++;on connect of new clients, why whould a new client be interested in an old pending reply?Guess that would help and only minor impack normal operation (will not happen on single client connections, and on multi client connections just sometimes for large replies)

-

@chrishamm

Today I was able to get new USB logs - the SPI timeout is still present in the last release.Printer was idle with one open session.

Error: failed to retrieve WiFi status message: SPI timeout<\n> === Diagnostics ===<\n> RepRapFirmware for Duet 2 WiFi/Ethernet version 3.6.0-beta.3+4 (2025-02-05 09:48:00) running on Duet WiFi 1.02 or later + DueX5v0.11<\n> Board ID: 08DJM-9178L-L4MSJ-6J1FL-3S86J-TB2LN<\n> Used output buffers: 1 of 26 (21 max)<\n> === RTOS ===<\n> Static ram: 23776<\n> Dynamic ram: 69864 of which 0 recycled<\n> Never used RAM 25444, free system stack 130 words<\n> Tasks: NETWORK(1,nWait 6,10.1%,211) HEAT(3,nWait 5,0.1%,283) Move(4,nWait 5,0.0%,264) DUEX(5,nWait 5,0.0%,23) MAIN(1,running,89.0%,698) IDLE(0,ready,0.8%,29), total 100.0%<\n> Owned mutexes: WiFi(NETWORK) USB(MAIN)<\n> === Platform ===<\n> Last reset 24:55:18 ago, cause: power up<\n> Last software reset at 2025-02-03 20:56, reason: User, Gcodes spinning, available RAM 24764, slot 1<\n> Software reset code 0x0003 HFSR 0x00000000 CFSR 0x00000000 ICSR 0x00400000 BFAR 0xe000ed38 SP 0x00000000 Task MAIN Freestk 0 n/a<\n> Error status: 0x00<\n> MCU temperature: min 26.2, current 29.5, max 30.4<\n> Supply voltage: min 23.7, current 24.0, max 24.3, under voltage events: 0, over voltage events: 0, power good: yes<\n> Heap OK, handles allocated/used 99/7, heap memory allocated/used/recyclable 2048/256/120, gc cycles 4227<\n> Events: 0 queued, 0 completed<\n> Date/time: 1970-01-01 00:00:00<\n> Slowest loop: 202.01ms; fastest: 0.12ms<\n> I2C nak errors 0, send timeouts 0, receive timeouts 0, finishTimeouts 0, resets 0<\n> === Storage ===<\n> Free file entries: 9<\n> SD card 0 detected, interface speed: 20.0MBytes/sec<\n> SD card longest read time 28.9ms, write time 101.0ms, max retries 0<\n> === Move ===<\n> Segments created 38, maxWait 41665760ms, bed comp in use: none, height map offset 0.000, hiccups added 0/0 (0.00ms), max steps late 0, ebfmin 0.00, ebfmax 0.00<\n> Pos req/act/dcf: 40320.00/40320/-0.00 -24320.00/-24320/-0.00 400.00/400/0.00<\n> no step interrupt scheduled<\n> Driver 0: standstill, SG min 177<\n> Driver 1: standstill, SG min 167<\n> Driver 2: standstill, SG min n/a<\n> Driver 3: standstill, SG min n/a<\n> Driver 4: standstill, SG min n/a<\n> Driver 5: standstill, SG min n/a<\n> Driver 6: standstill, SG min n/a<\n> Driver 7: standstill, SG min n/a<\n> Driver 8: standstill, SG min n/a<\n> Driver 9: standstill, SG min n/a<\n> Driver 10: <\n> Driver 11: <\n> === DDARing 0 ===<\n> Scheduled moves 15, completed 15, LaErrors 0, Underruns [0, 0, 0]<\n> Segments left 0<\n> Code queue is empty<\n> === Heat ===<\n> Bed heaters 0 -1 -1 -1, chamber heaters -1 -1 -1 -1, ordering errs 0<\n> === GCodes ===<\n> Movement locks held by null<\n> HTTP is idle in state(s) 0 0 0 0<\n> Telnet is idle in state(s) 0<\n> File is idle in state(s) 0<\n> USB is ready with "M122" in state(s) 0<\n> Aux is idle in state(s) 0<\n> Trigger is idle in state(s) 0<\n> Queue is idle in state(s) 0<\n> LCD is idle in state(s) 0<\n> Daemon is idle in state(s) 0<\n> Autopause is idle in state(s) 0<\n> === Filament sensors ===<\n> check 0 clear 319057472<\n> Extruder 0: pos 2160.00, errs: frame 0 parity 0 ovrun 0 pol 0 ovdue 0<\n> === DueX ===<\n> Read count 1, 0.00 reads/min<\n> === Network ===<\n> Slowest loop: 201.38ms; fastest: 0.00ms<\n> Responder states: HTTP(0) HTTP(0) HTTP(0) FTP(0) Telnet(0)<\n> HTTP sessions: 0 of 8<\n> === WiFi ===<\n> Interface state: active<\n> Module is connected to access point <\n> Failed messages: pending 0, notrdy 5, noresp 261465<\n> Failed to get WiFi status<\n> Socket states: 0 0 0 6 0 0 0 0<\n> ok<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> Error: failed to retrieve WiFi statuI was able to reset the WIFI module via USB with

M552 S-1 M552 S0 M552 S1WiFi module stopped<\n> ok<\n> Error: failed to retrieve WiFi status message: SPI timeout<\n> ok<\n> WiFi module started<\n> === Diagnostics ===<\n> RepRapFirmware for Duet 2 WiFi/Ethernet version 3.6.0-beta.3+4 (2025-02-05 09:48:00) running on Duet WiFi 1.02 or later + DueX5v0.11<\n> Board ID: 08DJM-9178L-L4MSJ-6J1FL-3S86J-TB2LN<\n> Used output buffers: 1 of 26 (21 max)<\n> === RTOS ===<\n> Static ram: 23776<\n> Dynamic ram: 69864 of which 0 recycled<\n> Never used RAM 25444, free system stack 130 words<\n> Tasks: NETWORK(1,ready,0.9%,211) HEAT(3,nWait 5,0.1%,283) Move(4,nWait 5,0.0%,264) DUEX(5,nWait 5,0.0%,23) MAIN(1,running,97.5%,698) IDLE(0,ready,1.5%,29), total 100.0%<\n> Owned mutexes: USB(MAIN)<\n> === Platform ===<\n> Last reset 24:57:54 ago, cause: power up<\n> Last software reset at 2025-02-03 20:56, reason: User, Gcodes spinning, available RAM 24764, slot 1<\n> Software reset code 0x0003 HFSR 0x00000000 CFSR 0x00000000 ICSR 0x00400000 BFAR 0xe000ed38 SP 0x00000000 Task MAIN Freestk 0 n/a<\n> Error status: 0x00<\n> MCU temperature: min 28.7, current 29.7, max 30.2<\n> Supply voltage: min 23.9, current 23.9, max 24.0, under voltage events: 0, over voltage events: 0, power good: yes<\n> Heap OK, handles allocated/used 99/7, heap memory allocated/used/recyclable 2048/1164/1028, gc cycles 4234<\n> Events: 0 queued, 0 completed<\n> Date/time: 1970-01-01 00:00:00<\n> Slowest loop: 99.34ms; fastest: 0.22ms<\n> I2C nak errors 0, send timeouts 0, receive timeouts 0, finishTimeouts 0, resets 0<\n> === Storage ===<\n> Free file entries: 9<\n> SD card 0 detected, interface speed: 20.0MBytes/sec<\n> SD card longest read time 2.3ms, write time 4.7ms, max retries 0<\n> === Move ===<\n> Segments created 38, maxWait 0ms, bed comp in use: none, height map offset 0.000, hiccups added 0/0 (0.00ms), max steps late 0, ebfmin 0.00, ebfmax 0.00<\n> Pos req/act/dcf: 40320.00/40320/-0.00 -24320.00/-24320/-0.00 400.00/400/0.00<\n> no step interrupt scheduled<\n> Driver 0: standstill, SG min n/a<\n> Driver 1: standstill, SG min n/a<\n> Driver 2: standstill, SG min n/a<\n> Driver 3: standstill, SG min n/a<\n> Driver 4: standstill, SG min n/a<\n> Driver 5: standstill, SG min n/a<\n> Driver 6: standstill, SG min n/a<\n> Driver 7: standstill, SG min n/a<\n> Driver 8: standstill, SG min n/a<\n> Driver 9: standstill, SG min n/a<\n> Driver 10: <\n> Driver 11: <\n> === DDARing 0 ===<\n> Scheduled moves 15, completed 15, LaErrors 0, Underruns [0, 0, 0]<\n> Segments left 0<\n> Code queue is empty<\n> === Heat ===<\n> Bed heaters 0 -1 -1 -1, chamber heaters -1 -1 -1 -1, ordering errs 0<\n> === GCodes ===<\n> Movement locks held by null<\n> HTTP is idle in state(s) 0 0 0 0<\n> Telnet is idle in state(s) 0<\n> File is idle in state(s) 0<\n> USB is ready with "M122" in state(s) 0<\n> Aux is idle in state(s) 0<\n> Trigger is idle in state(s) 0<\n> Queue is idle in state(s) 0<\n> LCD is idle in state(s) 0<\n> Daemon is idle in state(s) 0<\n> Autopause is idle in state(s) 0<\n> === Filament sensors ===<\n> check 0 clear 319632530<\n> Extruder 0: pos 2160.00, errs: frame 0 parity 0 ovrun 0 pol 0 ovdue 0<\n> === DueX ===<\n> Read count 0, 0.00 reads/min<\n> === Network ===<\n> Slowest loop: 200.20ms; fastest: 0.00ms<\n> Responder states: HTTP(0) HTTP(0) HTTP(0) FTP(0) Telnet(0)<\n> HTTP sessions: 0 of 8<\n> === WiFi ===<\n> Interface state: idle<\n> Module is idle<\n> Failed messages: pending 0, notrdy 0, noresp 0<\n> Firmware version 2.2.1<\n> MAC address 00:00:00:00:00:00<\n> Module reset reason: Turned on by main processor, Vcc 3.47, flash size 4194304, free heap 53932<\n> Clock register 00002002<\n> Socket states: 0 0 0 6 0 0 0 0<\n> ok<\n> ok<\n> WiFi module is connected to access point Meltingplot, IP address 10.42.0.2<\n>As this is a different bug than the large response one, should we keep track in a different thread, @chrishamm @T3P3Tony ?

-

@timschneider yes please

-

undefined timschneider referenced this topic

undefined timschneider referenced this topic