Setting tool offset using USB microscope

-

@Danal that is brilliant! Didn't think of using the Z piezo probe to do X and Y offset. I will look to implement something like this.

-

@Danal Are you sure you can get the X/Y offset that precise? I am asking because it really depends on where you hit such a nozzle tip.

-

@tekstyle said in Setting tool offset using USB microscope:

@Danal that is brilliant! Didn't think of using the Z piezo probe to do X and Y offset. I will look to implement something like this.

There is no piezo involved. It is a simple make/break of a circuit between the brass nozzle and the touchplate.

-

@TC said in Setting tool offset using USB microscope:

@Danal Are you sure you can get the X/Y offset that precise? I am asking because it really depends on where you hit such a nozzle tip.

XY hits the conical side of the nozzle, not the tip. The exact taper doesn't matter, because the algorithm touches both sides. It only needs to be symmetric with itself. It does not need to be the same as the "next" or "prior" tool.
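A minimal sketch of why the taper cancels out, assuming the probing routine records the axis position at which contact is made from each side. The function name and the readings are hypothetical, not taken from the actual script:

```python
def center_from_touches(touch_low, touch_high):
    """Return the nozzle center along one axis from two opposing touches.

    touch_low / touch_high are the axis positions (mm) at which the circuit
    closed when approaching from each side. As long as the cone is symmetric,
    both contacts sit the same distance from the nozzle axis, so that
    distance cancels in the midpoint.
    """
    return (touch_low + touch_high) / 2.0


# Hypothetical readings for two tools with different tapers.
t0_x = center_from_touches(148.20, 151.80)  # contacts 1.8 mm either side of the axis
t1_x = center_from_touches(147.35, 152.85)  # contacts 2.75 mm either side of the axis
print(t0_x, t1_x)
```

The contact distance differs between the two tools, but each center depends only on that tool's own pair of touches.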

-

@Danal interesting, but that requires the nozzle to be perfectly perpendicular, because otherwise you get an additional offset you don't detect, right?

-

@TC said in Setting tool offset using USB microscope:

@Danal interesting, but that requires the nozzle to be perfectly perpendicular, because otherwise you get an additional offset you don't detect, right?

"Perfectly" is pretty strong... but you are correct. It does need to be close to 90° to the surface. Eyeballing the whole hot end seems to work.

-

@Danal ok^^ did you already test this procedure? I find it very inspiring.

-

@TC said in Setting tool offset using USB microscope:

@Danal ok^^ did you already test this procedure? I find it very inspiring.

Yes. I've been aligning this way.

Although... I am starved for time... and I've also been testing cameras. So I wouldn't claim any of this is perfected. Both touchplate and camera look promising.

-

@Danal I am working with a USB microscope right now, but so far I always have to do manual fine tuning by printing test objects...

-

@TC said in Setting tool offset using USB microscope:

@Danal I am working with a USB microscope right now, but so far I always have to do manual fine tuning by printing test objects...

My final target is full automation. I've found that the various microscopes have such a narrow field of view that, when "starting from scratch" with no offsets (yet), if you put T0 over the scope, write down the coordinates (or capture them with a script), and put T1 in that same position, T1 often won't even be in the field of view.

Therefore, I've switched to the Logitech C270. That specific one because it has a threaded lens held captive by a dot of hot-melt (or similar). It is easy to open up, turn the lens, and get it to close focus.

Anyway, using this camera and an overlay circle (drawn with OpenCV), I've found that I can be repeatable to better than the tool-to-tool repeatability of the printer.

So, yeah, no printing test objects.

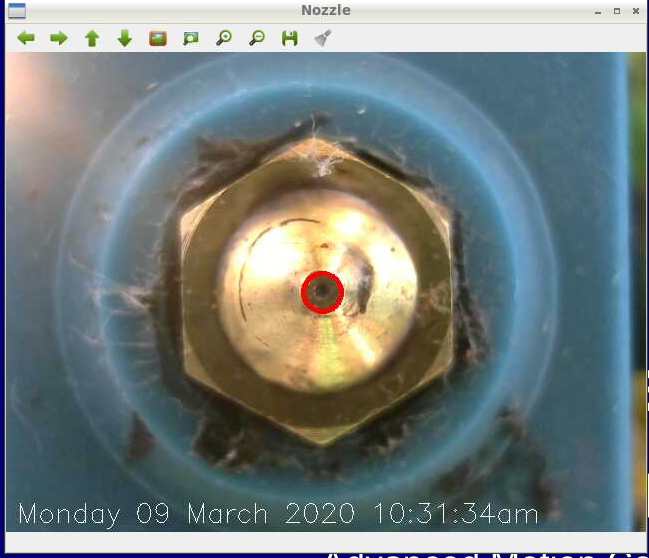

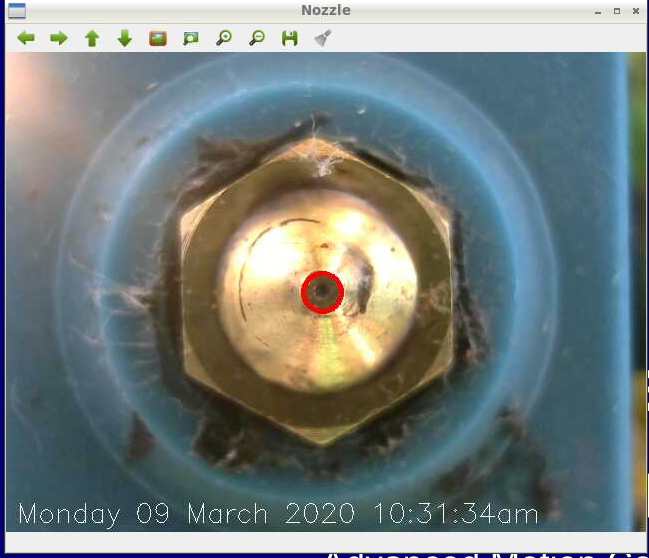

C270 image:

-

While this looks like the other pic, this is machine vision "finding" the end of the nozzle. This also produces a coordinate (in pixels), and you can see that averaging these over a bunch of reads can get it nailed down:

316.0 240.0

316.0 240.0

315.88 239.88

316.0 239.75

316.0 240.0

316.0 240.0

316.0 240.0

I moved the nozzle 0.1mm, and the readings (in pixels) changed to:

312.0 238.0

312.0 238.0

That appears to be a shift of about -4 x -2 pixels, so about 4.472 pixels on the diagonal. Part of the work that needs to be done is figuring out how to "calibrate" movement in pixels to movement in mm when this is installed on a bunch of people's printers, with different focus, and maybe even different cameras.
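As a rough illustration of that calibration step, here is a sketch that averages the readings before and after a known move and derives a pixels-per-mm scale. The helper names are made up for this example. It assumes the move was exactly 0.1 mm and that the image scale is the same in X and Y:

```python
import math


def mean_point(readings):
    """Average a list of (x, y) pixel readings."""
    n = len(readings)
    return (sum(x for x, _ in readings) / n, sum(y for _, y in readings) / n)


def pixels_per_mm(before, after, move_mm):
    """Estimate the image scale from a known move of move_mm millimetres."""
    bx, by = mean_point(before)
    ax, ay = mean_point(after)
    return math.hypot(ax - bx, ay - by) / move_mm


# Readings copied from the post above.
before = [(316.0, 240.0), (316.0, 240.0), (315.88, 239.88), (316.0, 239.75),
          (316.0, 240.0), (316.0, 240.0), (316.0, 240.0)]
after = [(312.0, 238.0), (312.0, 238.0)]

scale = pixels_per_mm(before, after, 0.1)
print(f"{scale:.1f} px/mm")
```

With these readings the scale works out to roughly 44 to 45 pixels per mm, depending on whether the first set is averaged or rounded to 316, 240. The number only holds for this camera, focus setting, and mounting distance.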

-

I have a Raspberry Pi that I used to run OctoPrint with Marlin. I might pick up a C270 to get this to work if I can't use the current Raspberry Pi camera with a phone macro lens.

-

@tekstyle said in Setting tool offset using USB microscope:

I have a Raspberry Pi that I used to run OctoPrint with Marlin. I might pick up a C270 to get this to work if I can't use the current Raspberry Pi camera with a phone macro lens.

My GitHub has a script that interfaces with a Duet; that one MUST run on a D3/Pi combo connected with the SPI cable. It also has another script that will work for (almost) any printer, because that script asks you to type in the coordinates as it steps you through the alignment, meaning the Pi need not be connected to anything (beyond the camera).

So you can use whichever, if you wish.

On the C270, take it apart and screw the lens in (or was it out?) until it focuses within a few inches. Works great.

-

I got installopencv.sh installed on my Raspberry Pi. I have Geany under Programming. What do I do with the .py files? I have a Duet 2 WiFi.

-

For the Duet 2, the only one that will really work is DuetPython/toolAlignImage.py

You will need either a physical console, or VNC. It has to be a screen that can display a video window. There are tons of tutorials on how to set those up. Once you have a session running on the graphics screen (not via ssh), change into that directory and enter:

python3 toolAlignImage.py

Within a few seconds, an image from your USB camera will appear (USB only, not PiCam). This camera should be a "close focus", and I still recommend the Logitech C270, removed from its case, and the lens screwed in (or out, I don't remember) until it focuses about 3 to 4 cm (inch and a half in barbarian units) from the lens. You can fine tune this once the camera is mounted.

Once you have an image up, follow the prompts in the script. It will have you do things, and enter numbers from the web console. In reality, this will work with ANY printer that mounts tools, you can jog, and it displays positions.

Roughly, it will have you jog each tool into place, and center it exactly, and enter the X and Y shown on the screen.

IMPORTANT: At the beginning, it will have you enter G10 Px X0Y0 for each tool. DO NOT SKIP THIS, it is easy to miss.

At the end, it will give you your G10 commands. NOTE: I MAY NEED TO UPDATE THE CALCULATIONS, I made some interesting discoveries lately. It might work... I will look at it tomorrow and let you know (or update Git).
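For anyone doing the arithmetic by hand, here is a sketch of one way to turn the noted X/Y readings into G10 lines. This is not the calculation from toolAlignImage.py. The function name, the readings, and above all the sign convention (reference tool minus this tool) are assumptions, so check the result on your own machine and flip the sign if a test move goes the wrong way:

```python
def g10_commands(centered, reference_tool=0):
    """Build G10 lines from the X/Y noted when each tool was centered.

    centered maps tool number -> (x, y) as read from the web console with
    all tool offsets zeroed beforehand.
    ASSUMPTION: offset = reference reading minus this tool's reading.
    Flip the sign if your firmware or workflow defines it the other way.
    """
    ref_x, ref_y = centered[reference_tool]
    lines = []
    for tool in sorted(centered):
        x, y = centered[tool]
        lines.append(f"G10 P{tool} X{ref_x - x:.3f} Y{ref_y - y:.3f}")
    return lines


# Hypothetical readings, not from a real machine.
readings = {0: (150.00, 100.00), 1: (150.35, 99.80)}
for line in g10_commands(readings):
    print(line)
```

The reference tool always comes out with a zero offset, and every other tool is expressed relative to it.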

Example camera mount. This really should be narrower, I quickly re-used it from another project.

STLs for that camera mount:

-

And... if the script doesn't work completely correctly, as long as it displays the live video stream with the green circle, you could write all the X and Y on a piece of paper and do the math yourself.

In fact, I just added a script called videoOnly.py. If nothing else works, use that and pencil and paper.

-

@Danal said in Setting tool offset using USB microscope:

For duet 2, the only one that will really work is DuetPython/toolAlignImage.py

You will need either a physical console, or VNC. It has to be a screen that can display a video window. There are tons of tutorials on how to set those up. Once you have a session running on the graphics screen (not via ssh), change into that directory and enter:

python3 toolAlignImage.py

This is what I am getting now. I am connected to the Raspberry Pi via keyboard, mouse, and a monitor.

pi@raspberrypi:~/Desktop/Duet $ python3 toolAlignImage.py

Traceback (most recent call last):

File "toolAlignImage.py", line 11, in <module>

import cv2

ModuleNotFoundError: No module named 'cv2'

-

@tekstyle said in Setting tool offset using USB microscope:

I got installopencv.sh installed on my Raspberry Pi. I have Geany under Programming. What do I do with the .py files? I have a Duet 2 WiFi.

Did you run that script? It takes upwards of an hour. Command to run it:

cd to the directory where it is.

./installopencv.sh
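A quick way to confirm whether the install actually made OpenCV visible to python3 is a small check like the one below. It is only a sketch using the standard library, and the helper name is made up for this example:

```python
import importlib.util


def module_available(name):
    """Return True if `import name` would find a module, without importing it."""
    return importlib.util.find_spec(name) is not None


if module_available("cv2"):
    print("cv2 found: OpenCV bindings are visible to this Python")
else:
    print("cv2 not found: run installopencv.sh, or check which python3 is in use")
```

Run it with the same python3 that will run toolAlignImage.py, since a module installed for one interpreter is not necessarily visible to another.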

-

@Danal said in Setting tool offset using USB microscope:

While this looks like the other pic, this is machine vision "finding" the end of the nozzle. This also produces a coordinate (in pixels), and you can see that averaging these over a bunch of reads can get it nailed down:

316.0 240.0

316.0 240.0

315.88 239.88

316.0 239.75

316.0 240.0

316.0 240.0

316.0 240.0

I moved the nozzle 0.1mm, and the readings (in pixels) changed to:

312.0 238.0

312.0 238.0

That appears to be a shift of about -4 x -2 pixels, so about 4.472 pixels on the diagonal. Part of the work that needs to be done is figuring out how to "calibrate" movement in pixels to movement in mm when this is installed on a bunch of people's printers, with different focus, and maybe even different cameras.

Danal,

Just out of curiosity and for my own understanding: I'm not sure if the nozzle is being moved by the Duet drivers or not. But if it is, then after you lock in on the location of the nozzle, can't you just get the XY location from the Duet, do the offset calculations, and then send the result back to the Duet with the G10 command to set the proper offset?

Then the pixels-to-mm conversion would be irrelevant. Even different camera focus lengths wouldn't really matter. You can poll the Duet for its XY location after the alignment is locked.

-

In the final alignment, yes, getting the position from the duet will work fine. No pixel/mm mapping required.

At the same time, let's assume the video image is 720 by 480, and we find a circle with its center at, say, (100, 200). What is a good movement to get the nozzle closer to the image center at (720/2, 480/2)? 1 mm? 10 mm? 0.1 mm?

Perhaps the best way is to avoid camera to mm mapping at all, and just make a reasonable guess for the first move (1mm) and loop through successive approximations.

Perhaps the initial move could be 1mm, and establish a rough mm to pixel mapping, and the next move could be really close, and the final centering could be successive approximation.

These are all valid design ideas. Some involve the mapping, some don't. I'll need to play around with it and see what really works.
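To make the second idea concrete, here is a sketch that uses two small probe moves to estimate how pixels respond to X and Y motion, then loops until the nozzle is centered. Everything here is hypothetical: `read_px` and `jog_mm` stand in for "find the circle" and "send a relative move", and `FakeRig` is a simulated printer and camera so the loop can run without hardware. On a real machine the probe moves would need to be small enough to keep the nozzle in view.

```python
import math


def center_nozzle(read_px, jog_mm, target_px, probe_mm=1.0, tol_px=0.5, max_iter=10):
    """Jog until the detected nozzle is within tol_px of target_px."""
    # Probe moves: one in X, one in Y, to learn the pixel response per mm.
    p0 = read_px()
    jog_mm(probe_mm, 0.0)
    p1 = read_px()
    jog_mm(0.0, probe_mm)
    p2 = read_px()
    a, c = (p1[0] - p0[0]) / probe_mm, (p1[1] - p0[1]) / probe_mm  # effect of X
    b, d = (p2[0] - p1[0]) / probe_mm, (p2[1] - p1[1]) / probe_mm  # effect of Y
    det = a * d - b * c
    if abs(det) < 1e-9:
        raise RuntimeError("probe moves did not give independent pixel motion")
    for _ in range(max_iter):
        px, py = read_px()
        ex, ey = target_px[0] - px, target_px[1] - py
        if math.hypot(ex, ey) <= tol_px:
            return True
        # Solve [[a, b], [c, d]] * (dx, dy) = (ex, ey) for the move in mm.
        jog_mm((d * ex - b * ey) / det, (a * ey - c * ex) / det)
    return False


class FakeRig:
    """Simulated printer plus camera with a flipped, slightly rotated view."""

    def __init__(self):
        self.x, self.y = 0.0, 0.0

    def jog(self, dx, dy):
        self.x += dx
        self.y += dy

    def read(self):
        return (100.0 - 40.0 * self.x + 3.0 * self.y,
                200.0 + 2.0 * self.x + 42.0 * self.y)


rig = FakeRig()
ok = center_nozzle(rig.read, rig.jog, target_px=(360.0, 240.0))
print(ok, rig.read())
```

Because the mapping is re-checked on every pass, the initial estimate only has to be good enough to move in roughly the right direction; the loop absorbs the rest.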

A separate, but related, challenge comes with the "out of the box" OpenCV "find a circle" function. It has several oddities.

First, it is REALLY good at finding circles. Glare on the nozzle will often result in several circles returned. We need it to find just the nozzle. One of the best ways to do that is to limit a min and max radius of found circles in pixels. I've hand tuned my examples, but I need to find a way to make this work for (almost) everyone.

Second, it is SLOW. The more constraints you add (like the min/max radius from the first point above), the slower it gets. A frame rate of about 2 per second is really good when searching with constraints. It gets even slower if it does not find circles (I assume it keeps looking). I am seriously considering modifying the library to return a null if a certain amount of time passes and no circles have been found.
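Short of modifying the library, one workaround is to wrap the search in a timeout on the calling side. The sketch below is that swapped-in technique, not a change to OpenCV: `call_with_timeout` is a made-up helper and `slow_search` is a stand-in for the real circle search.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def call_with_timeout(fn, timeout_s, *args, **kwargs):
    """Run fn in a worker thread; return its result, or None on timeout.

    Caveat: the worker is not killed on timeout. It keeps running in the
    background until fn returns; only the caller stops waiting.
    """
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(fn, *args, **kwargs)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return None
    finally:
        pool.shutdown(wait=False)


def slow_search(delay_s):
    """Stand-in for a circle search that takes delay_s seconds."""
    time.sleep(delay_s)
    return [(316.0, 240.0, 22.0)]


print(call_with_timeout(slow_search, 1.0, 0.01))  # finishes in time
print(call_with_timeout(slow_search, 0.05, 0.3))  # caller gives up, gets None
```

Since the abandoned search still consumes CPU until it finishes, this hides the delay from the main loop but does not make the search itself any cheaper.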

Combine these two things and getting it to find the actual nozzle, once and only once, is a challenge that I'm still researching.
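One possible way to get "once and only once" is to filter the returned circles after detection rather than relying on the detector alone. The sketch below assumes the detector hands back (x, y, radius) rows in pixels; if the function in question is cv2.HoughCircles, its minRadius and maxRadius parameters apply the same radius limits inside the search. The helper name and sample detections are hypothetical.

```python
import math


def pick_nozzle(circles, min_r, max_r, expected_xy):
    """Return the (x, y, r) candidate most likely to be the nozzle, or None.

    circles: iterable of (x, y, r) in pixels from a circle detector.
    Candidates with a radius outside [min_r, max_r] are dropped, then the
    one closest to expected_xy (say, the previous detection) wins.
    """
    ex, ey = expected_xy
    in_range = [c for c in circles if min_r <= c[2] <= max_r]
    if not in_range:
        return None
    return min(in_range, key=lambda c: math.hypot(c[0] - ex, c[1] - ey))


# Hypothetical detections: the nozzle plus two glare artefacts.
found = [(316.0, 240.0, 22.0), (330.0, 236.0, 6.0), (300.0, 250.0, 23.0)]
print(pick_nozzle(found, min_r=18, max_r=28, expected_xy=(315.0, 241.0)))
```

The radius limits still have to be tuned per camera and focus setting, so this narrows the problem rather than solving the "works for (almost) everyone" part.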

One thought that I have not looked at at all: Are there other circle search functions built on OpenCV, beyond the one that comes with OCV?

The real key to all of this is time. At this moment, I'm rebuilding the printer with which I was doing most of these experiments. Maybe in a few days I can try some of this again.